Hi there, internet friends. The time has come for yet another tutorial, where we will breathe even more life into the scene by applying textures to our objects! Textures make the scene far more realistic than plain colors, and texturing is one of the most important techniques in 3D graphics. So let's not waste time with a foreword and begin right away!

Before we can map a texture onto an object, we have to be able to load an image from a file and hand the loaded data over to OpenGL. OpenGL itself does not provide any facilities for loading images, so it's up to you which library you go with (a long time ago, there was a library called glaux, which stands for GL auxiliary, and it supported loading BMP files; I hope some of you remember this ancient library).

For the purpose of these tutorials, I have decided to go with the stb libraries, a set of header-only libraries, one of which is called stb_image and handles image loading. It seems to be really complete and supports all major graphics formats, which is exactly what we need. Furthermore, it's header-only, which practically means we just have to include it and we're free from the hassle of setting up libraries. I have set everything up for you, but there is one thing you must do on your own: call this git command again to clone the stb repository:

Now that you've done this, we're ready to start creating a class that will hold the texture object:

    class Texture
    {
    public:
        bool loadTexture2D(const std::string& fileName, bool generateMipmaps = true);
        void bind(int textureUnit = 0) const;
        void deleteTexture();

        int getWidth() const;
        int getHeight() const;
        int getBytesPerPixel() const;

    private:
        GLuint _textureID = 0;
        int _width = 0;
        int _height = 0;
        int _bytesPerPixel = 0;
        bool _isLoaded = false;

        bool isLoadedCheck() const;
    };

To keep the tradition, I will again list the methods and briefly explain what they do. Most of them are pretty self-explanatory though:

The most important job here is loading the texture from a file correctly, so let's go through the code of the loadTexture2D function:

    bool Texture::loadTexture2D(const std::string& fileName, bool generateMipmaps)
    {
        stbi_set_flip_vertically_on_load(1);
        const auto imageData = stbi_load(fileName.c_str(), &_width, &_height, &_bytesPerPixel, 0);
        if (imageData == nullptr)
        {
            std::cout << "Failed to load image " << fileName << "!" << std::endl;
            return false;
        }

        glGenTextures(1, &_textureID);
        glBindTexture(GL_TEXTURE_2D, _textureID);

        GLenum internalFormat = 0;
        GLenum format = 0;
        if (_bytesPerPixel == 4) {
            internalFormat = format = GL_RGBA;
        }
        else if (_bytesPerPixel == 3) {
            internalFormat = format = GL_RGB;
        }
        else if (_bytesPerPixel == 1) {
            internalFormat = format = GL_ALPHA;
        }

        glTexImage2D(GL_TEXTURE_2D, 0, internalFormat, _width, _height, 0, format, GL_UNSIGNED_BYTE, imageData);

        if (generateMipmaps) {
            glGenerateMipmap(GL_TEXTURE_2D);
        }

        stbi_image_free(imageData);
        _isLoaded = true;
        return true;
    }

The first thing we have to do is call stbi_set_flip_vertically_on_load(1). Otherwise, our texture would be flipped. Why is that? OpenGL expects texture data in an order you probably wouldn't expect: it wants the rows bottom-up, just like the Y axis points up. When you look at an image, you normally look at it (and read text as well) top-down, and that is the order in which stb_image returns the rows by default. I don't know exactly why OpenGL does it this way; probably the OpenGL guys decided that they wanted everything to be really consistent, so they just did it like this.
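To make the flip less mysterious, here is a minimal sketch of what reversing the row order of a tightly packed image amounts to. The function name `flipRowsVertically` and the use of `std::vector` are assumptions made for illustration; this is not how stb_image implements it internally.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: reverses the row order of a tightly packed image in
// place, so that a top-down image becomes a bottom-up one (and vice versa).
void flipRowsVertically(std::vector<unsigned char>& pixels, int width, int height, int bytesPerPixel)
{
    const std::size_t rowSize = static_cast<std::size_t>(width) * bytesPerPixel;
    assert(pixels.size() == rowSize * height);

    // Swap the first row with the last, the second with the second to last, ...
    for (int top = 0, bottom = height - 1; top < bottom; ++top, --bottom)
    {
        std::swap_ranges(pixels.begin() + top * rowSize,
                         pixels.begin() + (top + 1) * rowSize,
                         pixels.begin() + bottom * rowSize);
    }
}
```

For a 2x3 single-channel image with rows {1, 2}, {3, 4} and {5, 6}, the buffer ends up holding {5, 6}, {3, 4}, {1, 2}.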

Now the actual loading is performed by calling stbi_load(fileName.c_str(), &_width, &_height, &_bytesPerPixel, 0). This function returns a pointer to the raw color data of the image, pixel by pixel. Some metadata about the image, namely width, height and bytes per pixel, is stored in the corresponding variables. The last parameter of that function can force a specific number of components per pixel, but setting it to 0 just loads the image as-is. If the function returns nullptr, however, the image loading has failed, so we don't proceed at all.

If loading hasn't failed, we now have to turn this raw pointer to pixel data into an OpenGL texture. That's why we start by generating a texture object with glGenTextures. Next we must bind it, to tell OpenGL we are going to work with this one, by calling glBindTexture. Its first parameter is the target, which can be GL_TEXTURE_1D, GL_TEXTURE_2D, GL_TEXTURE_3D, or one of several other values - for the complete list, refer to the glBindTexture Reference Page. In this tutorial, we just stick to 2D textures, so the target is GL_TEXTURE_2D. The second parameter is the texture object ID generated previously.

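Creating a texture object is one thing, filling it with data is another. First of all, we have to determine what format we have. In this simple version of texture loading, I am just looking at how many bytes per pixel the loaded image uses, and according to this information we pick either GL_RGBA, GL_RGB or GL_ALPHA. Now we're ready to call the function glTexImage2D, which also deserves a bit of explanation. It has multiple parameters, so let's go through them in their order:

1. target - the texture target, GL_TEXTURE_2D in our case
2. level - the mipmap level being specified; 0 is the base image
3. internalformat - the format in which OpenGL should store the texture
4. width - the width of the image in pixels
5. height - the height of the image in pixels
6. border - a legacy parameter that must be 0
7. format - the format of the pixel data we are passing in
8. type - the data type of each color component, GL_UNSIGNED_BYTE here
9. data - a pointer to the pixel data itself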

Wow, one function, but 9 parameters, that's quite a lot! Luckily, there's no need to remember them in order; if you need to use it, always consult the specification. The important thing is that you understand what this function does.
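The format selection in loadTexture2D can be isolated into a small helper, which also makes the unhandled case visible. The sketch below is only an illustration: `formatForChannels` and the `kGl...` constants are hypothetical names, with the numeric values copied from the standard OpenGL headers so that the snippet compiles on its own. One caveat worth knowing: GL_ALPHA is not part of the core profile, where GL_RED is the usual choice for single-channel data, so treat the last mapping as mirroring the tutorial code rather than as a recommendation.

```cpp
#include <cassert>

// Values as defined in the standard OpenGL headers.
constexpr unsigned int kGlAlpha = 0x1906; // GL_ALPHA
constexpr unsigned int kGlRgb   = 0x1907; // GL_RGB
constexpr unsigned int kGlRgba  = 0x1908; // GL_RGBA

// Hypothetical helper: maps the bytes-per-pixel value reported by the image
// loader to a pixel format, or returns 0 when the count is not handled.
inline unsigned int formatForChannels(int bytesPerPixel)
{
    assert(bytesPerPixel > 0);
    switch (bytesPerPixel)
    {
    case 4:  return kGlRgba;
    case 3:  return kGlRgb;
    case 1:  return kGlAlpha;
    default: return 0; // e.g. 2 (gray + alpha): the caller should not upload
    }
}
```

A caller could check for 0 and report an error instead of passing an invalid format on to glTexImage2D.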

We're almost finished with loading the texture, but there is one more thing we might need to do, and that is generating mipmaps. It's super easy: we just have to call glGenerateMipmap(GL_TEXTURE_2D) and OpenGL does this for us! At the very end, we have to free the memory holding the image data, now that OpenGL has copied it over, and that's it!

It's really nice that we have loaded and created a texture, like really cool. But before we actually use it, we have to learn what texture filtering and a sampler are.

When we want to actually display OpenGL texture data on an object, we must also tell it how to FILTER the texture. What does this mean? It's simply the way OpenGL takes colors from the image and draws them onto a polygon. Since we will probably never map a texture pixel-perfect (that is, with the polygon's on-screen size in pixels equal to the texture size), we need to tell OpenGL which texels (single pixels of a texture) to take. There are several texture filters available, and they are defined separately for minification and magnification. What does this mean? Well, first imagine a wall that we are looking at, whose rendered pixel size is the same as our texture size (256x256):

Easy. In this case, every pixel has a corresponding texel, so there's not much to care about. But if we move closer to the wall, then we need to MAGNIFY the texture - because there are now more pixels on screen than texels in texture, we must tell OpenGL how to fetch the values from texture.
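As a rough mental model, the decision between the two cases can be sketched by comparing the texture size with the on-screen size along one axis. The names `Scaling` and `classifyScaling` are made up for this illustration; a real GPU makes this decision per fragment from how quickly the texture coordinates change across neighbouring pixels, not from whole-object sizes.

```cpp
#include <cassert>

enum class Scaling { OneToOne, Magnification, Minification };

// Hypothetical helper: compares the texture size with the rendered size along
// one axis and reports which filtering case applies.
Scaling classifyScaling(int texelsAcross, int pixelsAcross)
{
    assert(texelsAcross > 0 && pixelsAcross > 0);
    if (pixelsAcross > texelsAcross)
        return Scaling::Magnification; // more pixels than texels: texels get stretched
    if (pixelsAcross < texelsAcross)
        return Scaling::Minification;  // fewer pixels than texels: texels get squeezed
    return Scaling::OneToOne;
}
```

With the 256x256 wall texture, a wall that covers 512 pixels is magnified, while one that covers only 64 pixels is minified.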

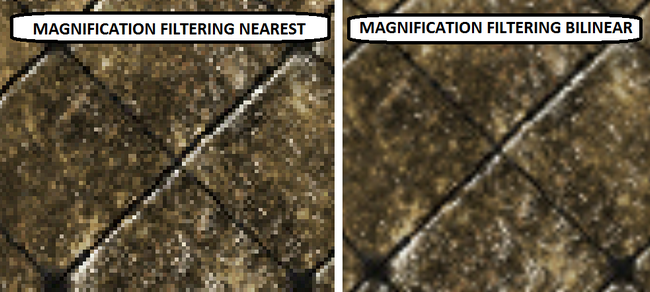

NEAREST FILTERING: The GPU simply takes the texel that is nearest to the exactly calculated point. This one is very fast, as no additional calculations are performed, but its quality is also very low, since multiple pixels share the same texel and the visual artifacts are very noticeable. The closer to the wall you are, the more blocky it looks (many squares with different colors, where each square represents a texel).
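A CPU-side sketch of nearest filtering may help to see why it is so cheap. `GrayImage` and `sampleNearest` are hypothetical names for this illustration, the image has a single channel to keep things short, and coordinates outside the image are simply clamped to its edge.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical single-channel image, stored row by row.
struct GrayImage
{
    int width;
    int height;
    std::vector<float> texels;
};

// Nearest lookup for normalized coordinates u, v in [0, 1].
float sampleNearest(const GrayImage& image, float u, float v)
{
    assert(image.width > 0 && image.height > 0);

    // Texel i covers the range [i / width, (i + 1) / width), so flooring the
    // scaled coordinate selects the texel whose centre is closest.
    int x = static_cast<int>(std::floor(u * image.width));
    int y = static_cast<int>(std::floor(v * image.height));
    x = std::min(std::max(x, 0), image.width - 1);
    y = std::min(std::max(y, 0), image.height - 1);
    return image.texels[y * image.width + x];
}
```

Note how every coordinate inside one texel's area returns exactly the same value, which is where the blocky look under magnification comes from.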

BILINEAR FILTERING: This one doesn't just take the closest texel; it calculates the distances to the 4 adjacent texels and returns their weighted average, with closer texels weighing more. This results in much better quality than nearest filtering, but requires a little more computational time (on modern hardware, this time is negligible). The following picture depicts it really clearly:
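In code, the weighted average of the four surrounding texels might look roughly like this. It is a simplified CPU sketch with hypothetical names (`GrayImage`, `texelAt`, `sampleBilinear`), a single channel, and indices clamped at the edges; actual hardware works with fixed-point weights and honours the configured wrap mode.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical single-channel image, stored row by row.
struct GrayImage
{
    int width;
    int height;
    std::vector<float> texels;
};

// Reads one texel, clamping the indices to the image edges.
float texelAt(const GrayImage& image, int x, int y)
{
    x = std::min(std::max(x, 0), image.width - 1);
    y = std::min(std::max(y, 0), image.height - 1);
    return image.texels[y * image.width + x];
}

// Bilinear lookup for normalized coordinates u, v in [0, 1].
float sampleBilinear(const GrayImage& image, float u, float v)
{
    assert(image.width > 0 && image.height > 0);

    // Shift by half a texel so that integer positions land on texel centres.
    const float x = u * image.width - 0.5f;
    const float y = v * image.height - 0.5f;
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const float fx = x - x0; // weight of the texels in column x0 + 1
    const float fy = y - y0; // weight of the texels in row y0 + 1

    // Blend horizontally within each of the two rows, then blend the rows.
    const float row0 = texelAt(image, x0, y0) * (1.0f - fx) + texelAt(image, x0 + 1, y0) * fx;
    const float row1 = texelAt(image, x0, y0 + 1) * (1.0f - fx) + texelAt(image, x0 + 1, y0 + 1) * fx;
    return row0 * (1.0f - fy) + row1 * fy;
}
```

Sampling the exact middle of a 2x2 image with the values 0, 10, 20 and 30 weighs all four texels equally and yields 15.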

If we moved further away from the wall, the texture would be bigger than the rendered size of our simple wall, and thus the texture must be MINIFIED. The problem is that now multiple texels may correspond to a single fragment. And what shall we do now? One solution may be to average all corresponding texels, but this may be really slow, as the whole texture might potentially fall into a single pixel. The nice solution to this problem is called MIPMAPPING. The texture is stored not only in its original size, but also downsampled to all smaller resolutions, with each dimension halved at every step, creating a "pyramid" of textures (this image is taken from Wikipedia):
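To get a feeling for how such a pyramid comes to be, here is a small CPU sketch that repeatedly halves an image by averaging 2x2 blocks. The names `GrayImage`, `downsampleByTwo` and `buildMipChain` are hypothetical, and the box filter is an assumption: glGenerateMipmap does this work on the GPU and the exact filter it uses is up to the driver.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical single-channel image, stored row by row.
struct GrayImage
{
    int width;
    int height;
    std::vector<float> texels;
};

// Produces the next smaller level by averaging 2x2 blocks of the source.
// Indices are clamped so that images that are one texel wide or high still work.
GrayImage downsampleByTwo(const GrayImage& src)
{
    GrayImage dst;
    dst.width = std::max(1, src.width / 2);
    dst.height = std::max(1, src.height / 2);
    dst.texels.resize(dst.width * dst.height);

    for (int y = 0; y < dst.height; ++y)
    {
        for (int x = 0; x < dst.width; ++x)
        {
            const int x0 = std::min(2 * x, src.width - 1);
            const int x1 = std::min(2 * x + 1, src.width - 1);
            const int y0 = std::min(2 * y, src.height - 1);
            const int y1 = std::min(2 * y + 1, src.height - 1);
            dst.texels[y * dst.width + x] = 0.25f * (src.texels[y0 * src.width + x0] +
                                                     src.texels[y0 * src.width + x1] +
                                                     src.texels[y1 * src.width + x0] +
                                                     src.texels[y1 * src.width + x1]);
        }
    }
    return dst;
}

// Builds all levels from the base image down to 1x1.
std::vector<GrayImage> buildMipChain(GrayImage base)
{
    assert(base.width > 0 && base.height > 0);
    std::vector<GrayImage> levels;
    levels.push_back(std::move(base));
    while (levels.back().width > 1 || levels.back().height > 1)
    {
        levels.push_back(downsampleByTwo(levels.back()));
    }
    return levels;
}
```

For a 256x256 texture this gives 9 levels: 256, 128, 64, 32, 16, 8, 4, 2 and 1 texels per side.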

Those particular sub-images are called mipmaps. With mipmapping enabled, the GPU selects a mipmap of appropriate size, according to the distance we see the object from, and then performs some filtering. This results in higher memory consumption (by about 33%, as the sum 1/4 + 1/16 + 1/64 + ... converges to 1/3), but gives nice visual results at very nice speed. And here comes yet another filtering you might have heard of - TRILINEAR filtering. It's almost the same as bilinear filtering, but with one more feature: it takes the two nearest mipmaps, does the bilinear filtering on each of them, and then averages the results. The name TRIlinear comes from the third dimension that enters the picture - with bilinear filtering we were interpolating in two dimensions, and trilinear filtering extends this to three.
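The roughly one-third memory overhead is easy to check by counting texels. The helper below, with the hypothetical name `mipmapTexelCount`, adds up the sizes of all levels below the base level; it assumes every level is stored tightly with the same number of bytes per texel.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper: total number of texels in all mipmap levels below the
// base level, for a texture of the given size.
std::uint64_t mipmapTexelCount(int width, int height)
{
    assert(width > 0 && height > 0);
    std::uint64_t total = 0;
    while (width > 1 || height > 1)
    {
        width = std::max(1, width / 2);
        height = std::max(1, height / 2);
        total += static_cast<std::uint64_t>(width) * height;
    }
    return total;
}
```

For a 256x256 texture the base level has 65536 texels and the smaller levels add 21845 more, which is just under one third.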

The OpenGL object that holds information about the texture filtering in use is called a sampler. Sampling is the process of fetching a value from a texture at a given position, so basically a sampler is an object where we remember how we do it (all the filtering parameters). As with all OpenGL objects, samplers are generated first (so we get their IDs) using the glGenSamplers function, and then we work with them using those generated IDs. So in general, if you want to affect the quality of textured objects, all you have to do is switch the sampler to change the filtering.

To keep everything systematic, I have created a Sampler class, which holds everything that we need:

    enum MagnificationFilter
    {
        MAG_FILTER_NEAREST, // Nearest filter for magnification
        MAG_FILTER_BILINEAR, // Bilinear filter for magnification
    };

    enum MinificationFilter
    {
        MIN_FILTER_NEAREST, // Nearest filter for minification
        MIN_FILTER_BILINEAR, // Bilinear filter for minification
        MIN_FILTER_NEAREST_MIPMAP, // Nearest filter for minification, but on closest mipmap
        MIN_FILTER_BILINEAR_MIPMAP, // Bilinear filter for minification, but on closest mipmap
        MIN_FILTER_TRILINEAR, // Bilinear filter for minification on two closest mipmaps, then averaged
    };

    class Sampler
    {
    public:
        void create();
        void bind(int textureUnit = 0) const;
        void deleteSampler();

        void setMagnificationFilter(MagnificationFilter magnificationFilter) const;
        void setMinificationFilter(MinificationFilter minificationFilter) const;
        void setRepeat(bool repeat) const;

    private:
        GLuint _samplerID = 0;
        bool _isCreated = false;

        bool createdCheck() const;
    };

I will go through the available methods:

I really recommend that you examine those methods yourself, for example to see which function calls need to be issued to set the filtering of a sampler correctly. As usual, you don't have to remember it, you can always look at the specification (or even my tutorials), but the important thing is that you understand what this is about.
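As a hint for that examination, one plausible way to translate the MinificationFilter values into OpenGL filter constants is sketched below. The mapping and the names `toGlMinFilter` and `kGl...` are assumptions, not taken from the tutorial's source; the numeric values are the ones from the standard OpenGL headers, repeated here so the snippet compiles without them. The result would typically be handed to glSamplerParameteri together with GL_TEXTURE_MIN_FILTER.

```cpp
#include <cassert>

// Values as defined in the standard OpenGL headers.
constexpr unsigned int kGlNearest              = 0x2600; // GL_NEAREST
constexpr unsigned int kGlLinear               = 0x2601; // GL_LINEAR
constexpr unsigned int kGlNearestMipmapNearest = 0x2700; // GL_NEAREST_MIPMAP_NEAREST
constexpr unsigned int kGlLinearMipmapNearest  = 0x2701; // GL_LINEAR_MIPMAP_NEAREST
constexpr unsigned int kGlLinearMipmapLinear   = 0x2703; // GL_LINEAR_MIPMAP_LINEAR

// Repeated from the tutorial so that the sketch is self-contained.
enum MinificationFilter
{
    MIN_FILTER_NEAREST,
    MIN_FILTER_BILINEAR,
    MIN_FILTER_NEAREST_MIPMAP,
    MIN_FILTER_BILINEAR_MIPMAP,
    MIN_FILTER_TRILINEAR,
};

// Hypothetical helper: picks the OpenGL constant for a minification filter.
unsigned int toGlMinFilter(MinificationFilter filter)
{
    switch (filter)
    {
    case MIN_FILTER_NEAREST:         return kGlNearest;
    case MIN_FILTER_BILINEAR:        return kGlLinear;
    case MIN_FILTER_NEAREST_MIPMAP:  return kGlNearestMipmapNearest;
    case MIN_FILTER_BILINEAR_MIPMAP: return kGlLinearMipmapNearest;
    case MIN_FILTER_TRILINEAR:       return kGlLinearMipmapLinear;
    }
    assert(false && "unknown minification filter");
    return kGlLinear;
}
```

In the constant names, the part before MIPMAP describes the filtering inside a level and the part after it describes how levels are chosen, which is why trilinear filtering corresponds to the LINEAR_MIPMAP_LINEAR variant.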

Now that we know about filterings and samplers, it's time to move to the actual texture mapping.

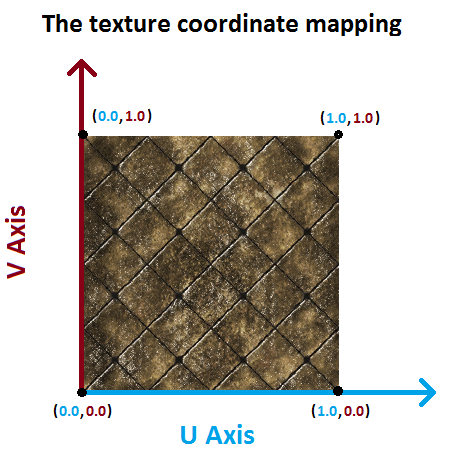

In order to map a texture onto an object, we have to provide texture coordinates (sometimes also referred to as UV coordinates). They tell OpenGL how to map the texture along the polygon. We just need to provide appropriate texture coordinates with every vertex and we're done. In our 2D texture case, a texture coordinate is represented by two numbers, one along the X axis (U coordinate) and one along the Y axis (V coordinate):

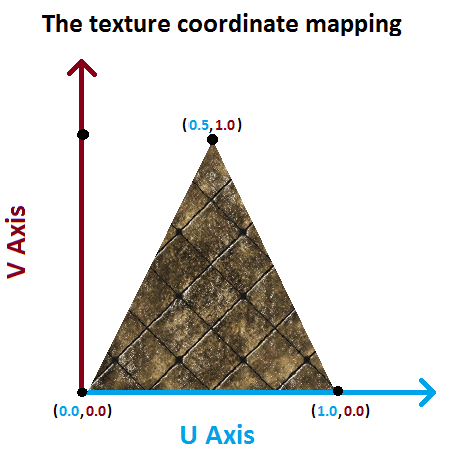

So if we would like to map our texture to a quad, we would simply provide the coordinates (0.0, 1.0) to the upper-left vertex, (1.0, 1.0) to the upper-right vertex, (1.0, 0.0) to the bottom-right vertex and (0.0, 0.0) to the bottom-left vertex. But what if we wanted to map the texture to, let's say, a triangle? Well, you can probably guess by now, and this picture will demonstrate it:

We simply need to copy the shape of our polygon in texture coordinates as well in order to map the texture properly. If we exceed the [0, 1] range, our texture gets mapped multiple times. Let's say we mapped the coordinates (0.0, 10.0), (10.0, 10.0), (10.0, 0.0) and (0.0, 0.0) to the quad: the texture would be repeated 10 times along the X axis and 10 times along the Y axis. This texture repeating is the default behavior, and it can be turned off by calling the setRepeat(false) function on the sampler.
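What repeating does to a single coordinate can be sketched in two tiny functions. The names `wrapRepeat` and `wrapClampToEdge` are made up for this illustration, and it is an assumption that setRepeat(false) switches to a clamping mode such as GL_CLAMP_TO_EDGE; real clamp-to-edge also keeps the coordinate half a texel away from the border, which is left out here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Repeat: only the fractional part of the coordinate matters, so the texture
// starts over at every whole number.
float wrapRepeat(float coord)
{
    assert(std::isfinite(coord));
    return coord - std::floor(coord);
}

// Clamp: everything outside [0, 1] sticks to the nearest edge of the texture.
float wrapClampToEdge(float coord)
{
    assert(std::isfinite(coord));
    return std::min(1.0f, std::max(0.0f, coord));
}
```

With repeating, the coordinate 2.25 samples the same spot as 0.25, whereas with clamping it ends up at 1.0, the edge of the texture.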

Now that we know what texture coordinates are, we must learn how to use them in rendering. It's actually pretty simple - a texture coordinate is just another vertex attribute. So when we have our VAO, which holds a VBO with vertex positions, we can create yet another VBO that holds texture coordinates! However, this time the difference is that the vertex attribute index is 1 (0 is for position), we have only two floats per vertex (U and V coordinate), and thus the stride between two consecutive texture coordinates is sizeof(glm::vec2), or the size of two floats. The following code in initializeScene() shows just that:

    void initializeScene()
    {
        // ...

        glGenVertexArrays(1, &mainVAO); // Creates one Vertex Array Object
        glBindVertexArray(mainVAO);

        // Setup vertex positions first
        shapesVBO.createVBO();
        shapesVBO.bindVBO();
        shapesVBO.addData(static_geometry::plainGroundVertices, sizeof(static_geometry::plainGroundVertices));
        shapesVBO.addData(static_geometry::cubeVertices, sizeof(static_geometry::cubeVertices));
        shapesVBO.addData(static_geometry::pyramidVertices, sizeof(static_geometry::pyramidVertices));
        shapesVBO.uploadDataToGPU(GL_STATIC_DRAW);

        glEnableVertexAttribArray(0);
        glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, sizeof(glm::vec3), (void*)0);

        // Setup texture coordinates next
        texCoordsVBO.createVBO();
        texCoordsVBO.bindVBO();
        texCoordsVBO.addData(static_geometry::plainGroundTexCoords, sizeof(static_geometry::plainGroundTexCoords));
        texCoordsVBO.addData(static_geometry::cubeTexCoords, sizeof(static_geometry::cubeTexCoords), 6);
        texCoordsVBO.addData(static_geometry::pyramidTexCoords, sizeof(static_geometry::pyramidTexCoords), 4);
        texCoordsVBO.uploadDataToGPU(GL_STATIC_DRAW);

        glEnableVertexAttribArray(1);
        glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, sizeof(glm::vec2), (void*)0);

        // ...
    }

Now that we have the data on the GPU, we also need to add a few lines to the shaders. The first thing we must do is pass the texture coordinate, which is an input variable in the vertex shader, on to the fragment shader:

    #version 440 core

    uniform struct
    {
        mat4 projectionMatrix;
        mat4 viewMatrix;
        mat4 modelMatrix;
    } matrices;

    layout(location = 0) in vec3 vertexPosition;
    layout(location = 1) in vec2 vertexTexCoord;

    smooth out vec3 ioVertexColor;
    smooth out vec2 ioVertexTexCoord;

    void main()
    {
        mat4 mvpMatrix = matrices.projectionMatrix * matrices.viewMatrix * matrices.modelMatrix;
        gl_Position = mvpMatrix * vec4(vertexPosition, 1.0);
        ioVertexTexCoord = vertexTexCoord;
    }

Then, in the fragment shader, we'll have to add two new things:

    #version 440 core

    layout(location = 0) out vec4 outputColor;

    smooth in vec3 ioVertexColor;
    smooth in vec2 ioVertexTexCoord;

    uniform sampler2D gSampler;
    uniform vec4 color;

    void main()
    {
        vec4 texColor = texture(gSampler, ioVertexTexCoord);
        outputColor = texColor*color;
    }

The first is the uniform sampler2D variable in the fragment shader. With this variable, we fetch texture data based on texture coordinates. From the program, we just need to assign the sampler variable one integer that represents the texture unit. For today, it's always 0; texture units will be covered in the next tutorial about multitexturing.

The second thing is obtaining the texel value itself. This is done by calling the function texture, which takes the sampler variable as its first parameter and the texture coordinate as its second parameter. The texture coordinate ioVertexTexCoord is now a per-fragment interpolated coordinate that came from the vertex shader.

So to summarize, in order to render a textured object, we have to add texture coordinates to some VBO, load the texture itself and create a sampler. All of this is done in the initializeScene() function. I don't want to paste all the code here; just look it up by opening the code of the tutorial, it should be pretty easy to read.

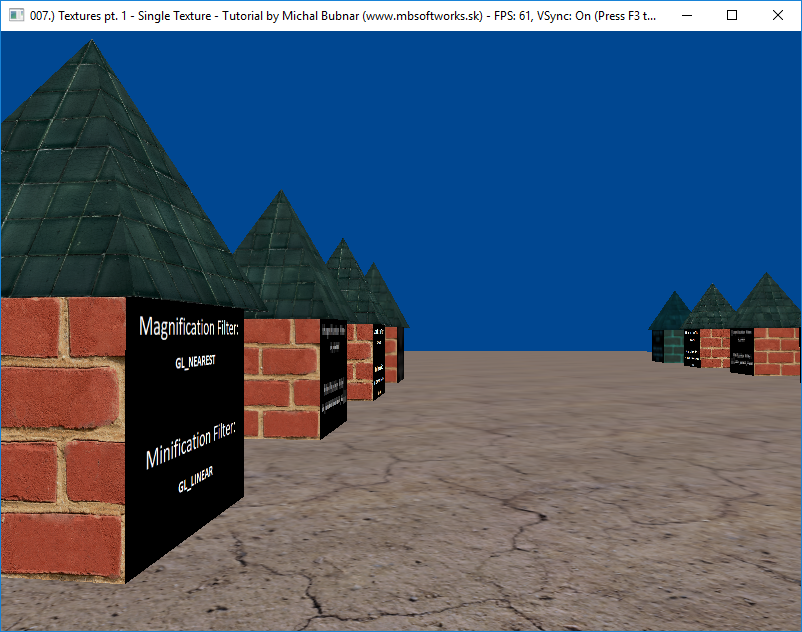

This is what has been achieved today:

As you can see, I am rendering multiple houses. Each house uses a different sampler for rendering, and if you observe the houses closely, you will see the differences between the filtering modes.

But wait, there's more! You can see that one of the houses is painted blue. The blue house is the one whose filtering is currently applied to the ground texture. By entering a house, you are able to change the filtering of the ground too! You can see how great the difference is between, for example, trilinear and nearest filtering for minification; it's unbelievable! In the next tutorial - Multitexturing - you will discover what a texture unit is and how to use multiple textures on the same object!
