Welcome to the 14th tutorial of my OpenGL4 tutorial series! This one took a bit longer to write: I had a lot of other things to do and was also travelling quite a lot because of the presidential elections here in Slovakia, so I haven't really been settled, so to speak. But now I am, and I am writing this article. So let's start with the basics of lighting.

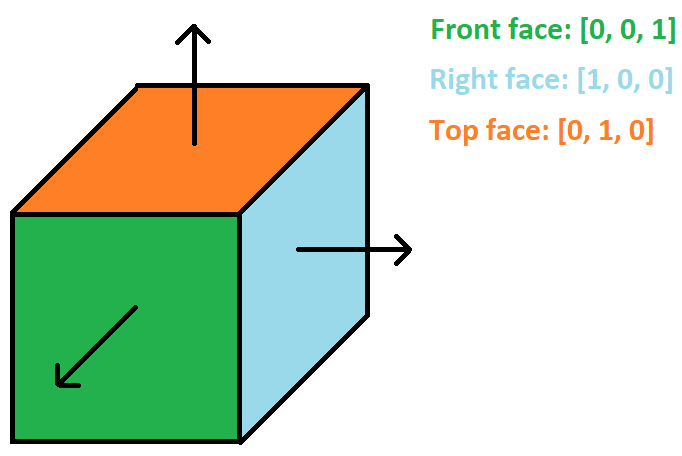

Finally, we are getting to one of the most fundamental things in 3D Graphics - normals. What are they? It's actually pretty simple - normal is a vector, that is perpendicular to the surface of an object. If you find this definition difficult and you don't understand, let's put it in simpler terms - normal is a direction (a vector), that represents where the surface "points" to. Let's show a picture of a cube, that depicts what I've just said:

As you can see, the front face of the cube is pointing towards the screen, so its normal is [0, 0, 1]. The right face is facing to the right, so its normal is [1, 0, 0]. And the last depicted face, the top one, is pointing upwards, so its normal is [0, 1, 0]. Analogously, we can define normals for the back, left and bottom faces of the cube. One more note: the length of a normal should always be 1, because the calculations we will learn about later rely on it. So don't forget to always normalize your vectors (make them unit length)!
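To make this concrete, here is a minimal C++ sketch of how a unit normal can be computed for one triangle of a face. It is not code from this tutorial's project: the names `Vec3`, `cross`, `normalize` and `faceNormal` are made up for illustration, and it assumes the triangle's vertices are given in counter-clockwise order when looking at the face from outside.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>; // hypothetical helper type: x, y, z

// Cross product of two 3D vectors; the result is perpendicular to both.
Vec3 cross(const Vec3& a, const Vec3& b)
{
    return { a[1] * b[2] - a[2] * b[1],
             a[2] * b[0] - a[0] * b[2],
             a[0] * b[1] - a[1] * b[0] };
}

// Scales a vector to unit length.
Vec3 normalize(const Vec3& v)
{
    const float length = std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
    assert(length > 0.0f); // a zero vector has no direction
    return { v[0] / length, v[1] / length, v[2] / length };
}

// Unit normal of a triangle whose vertices p0, p1, p2 are counter-clockwise.
Vec3 faceNormal(const Vec3& p0, const Vec3& p1, const Vec3& p2)
{
    const Vec3 edge1 = { p1[0] - p0[0], p1[1] - p0[1], p1[2] - p0[2] };
    const Vec3 edge2 = { p2[0] - p0[0], p2[1] - p0[1], p2[2] - p0[2] };
    return normalize(cross(edge1, edge2));
}
```

For a triangle of the front face of a unit cube, for example the vertices (-0.5, -0.5, 0.5), (0.5, -0.5, 0.5) and (0.5, 0.5, 0.5), this gives [0, 0, 1], matching the picture.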

Another aspect I consider important is this - the cube has 6 different normals. If we take the front face, which consists of 2 triangles and thus 6 vertices, all of them have the same normal [0, 0, 1]. But that's completely fine! In graphics, you usually define normals on a per-vertex basis. The times when they were defined on a per-polygon basis are long over - even your everyday mobile phone can handle normals for every vertex with ease. Also notice that each corner of the cube actually consists of 3 different vertices with 3 different normals. For example, the top-left-front corner has one vertex from the front face, one from the left face and one from the top face, and each of them has its own normal.

Knowing this, we can now start talking about the two light types implemented in this tutorial - ambient and diffuse light.

Ambient light is the simplest type of light. It is light that has been scattered around so much that we simply consider it to be everywhere (omnipresent). Let's have a look at the fragment shader ambientLight.frag, which implements ambient light:

```glsl
#version 440 core

#include_part

struct AmbientLight
{
    vec3 color;
    bool isOn;
};

vec3 getAmbientLightColor(AmbientLight ambientLight);

#definition_part

vec3 getAmbientLightColor(AmbientLight ambientLight)
{
    return ambientLight.isOn ? ambientLight.color : vec3(0.0, 0.0, 0.0);
}
```

You can see that it has two simple attributes - a color and a flag telling whether the light is turned on. It's packed neatly in a struct (GLSL does support structs), so we keep the object-oriented programming spirit even here. The function getAmbientLightColor at the very bottom returns the ambient light color - if the light is on, we return its color, otherwise we return raw darkness.

You might have noticed two new things as well, namely #include_part and #definition_part. This is my own extension of GLSL that lets me reuse functions and structs in multiple shaders. The thing is that if you want to use a function like getAmbientLightColor in another fragment shader, you have to at least declare it there, otherwise that shader won't compile (just like in C++). So that's what I've done here - instead of copying and pasting everything into other files, you can simply #include another shader, just like in C++! You will see this in action later in this article, when we calculate the final light of a fragment. The implementation itself is hidden in shader.cpp, if you are interested.
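To give a rough idea of how such an extension can work, here is a small C++ sketch that pulls the text between the two markers out of a shader source string. This is only an illustration under my own assumptions and not the code from shader.cpp: the function name `extractIncludePart` is hypothetical, and a real loader would also have to resolve file paths and avoid including the same file twice.

```cpp
#include <cassert>
#include <string>

// Returns the text between "#include_part" and "#definition_part".
// If the begin marker is missing, an empty string is returned; if only the
// end marker is missing, everything after the begin marker is returned.
std::string extractIncludePart(const std::string& shaderSource)
{
    const std::string beginMarker = "#include_part";
    const std::string endMarker = "#definition_part";

    const auto begin = shaderSource.find(beginMarker);
    if (begin == std::string::npos)
    {
        return "";
    }

    const auto contentStart = begin + beginMarker.size();
    const auto end = shaderSource.find(endMarker, contentStart);
    const auto count = (end == std::string::npos) ? std::string::npos : end - contentStart;
    return shaderSource.substr(contentStart, count);
}
```

A shader that includes another one would then receive only this extracted part (structs and declarations), while the definitions stay in the original shader file.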

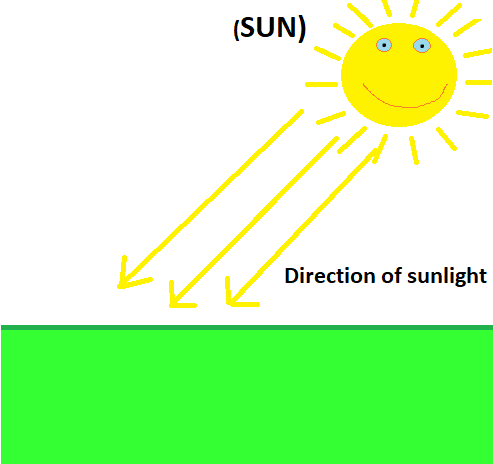

Here the things get interesting and finally normals enter the calculations! Diffuse light is pretty simple to explain, the mathematics behind it are still pretty simple, but definitely more complicated than ambient light  . First of all, we have to define our diffuse light source. This time, it isn't simply scattered everywhere like ambient light, but it has a direction. There is however no physical source of this light, it just shines in one direction and that's it. We could possibly take sun as an example - sun might have a physical location, but it's so far from the Earth that it does not matter and we consider direction of light only. This picture should make it more clear:

. First of all, we have to define our diffuse light source. This time, it isn't simply scattered everywhere like ambient light, but it has a direction. There is however no physical source of this light, it just shines in one direction and that's it. We could possibly take sun as an example - sun might have a physical location, but it's so far from the Earth that it does not matter and we consider direction of light only. This picture should make it more clear:

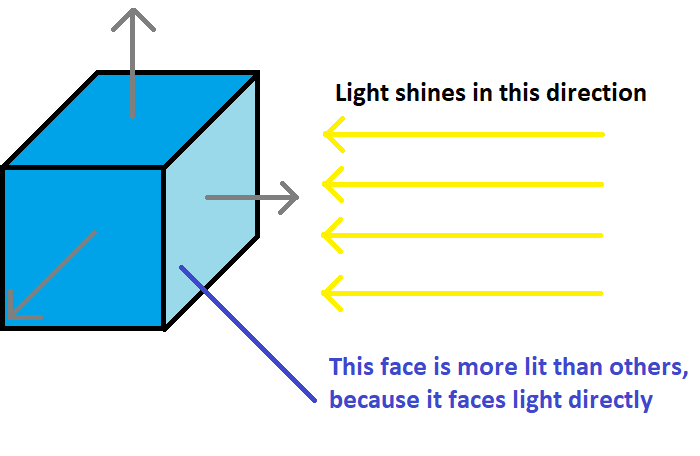

Now that we have a light with a direction, we need to calculate, how much should the particular face be lit. If the surface faces light direction perfectly, it should be fully lit. If the surface shows its back to the light direction, it should not be lit at all (only with ambient light, but not with diffuse). Anything in between is then partially lit. Another picture to explain this concept follows:

Obviously, the face on the right must be lit more than the other faces, because it faces the light perfectly. In other words, the normal and the light direction are parallel vectors pointing in opposite directions ([1, 0, 0] for the normal and [-1, 0, 0] for the light direction). To calculate the diffuse factor, we take the dot product of the normal and the light direction. Let's have a look at what we get in this case:

dot_product = Nx*Lx + Ny*Ly + Nz*Lz

dot_product = 1*(-1) + 0*0 + 0*0

dot_product = -1

We got -1 as a result. But that sounds suspicious - the face is facing the light perfectly and we got -1, which at first sight looks like negative light? Your suspicion is justified! The correct way to calculate the diffuse factor is to take the dot product of the normal and the negated light direction instead. This way, we get +1 instead of -1, and that is correct. Just look:

dot_product = Nx*(-Lx) + Ny*(-Ly) + Nz*(-Lz)

dot_product = 1*1 + 0*0 + 0*0

dot_product = 1

Of course, we can still get negative results from the dot product in other cases (when the surface faces away from the light), but then all we have to do is clamp the calculated diffuse factor to the range [0, 1].
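The arithmetic above can be checked on the CPU with a small C++ sketch. The names `Vec3`, `dot` and `diffuseFactor` are hypothetical helpers written just for this example, and both input vectors are assumed to be of unit length.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

using Vec3 = std::array<float, 3>; // hypothetical helper type: x, y, z

// Dot product of two 3D vectors.
float dot(const Vec3& a, const Vec3& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Diffuse factor in the range [0, 1].
// Assumes that normal and lightDirection are both unit vectors.
float diffuseFactor(const Vec3& normal, const Vec3& lightDirection)
{
    // Negate the light direction so that a face looking straight
    // into the light yields +1 instead of -1.
    const Vec3 toLight = { -lightDirection[0], -lightDirection[1], -lightDirection[2] };
    return std::max(0.0f, std::min(1.0f, dot(normal, toLight)));
}
```

With the light direction [-1, 0, 0], the right face (normal [1, 0, 0]) gets a factor of 1, the top face (normal [0, 1, 0]) gets 0, and the left face (normal [-1, 0, 0]) would get -1 before clamping, so it also ends up at 0.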

Let's transform the ideas above into code. Just as with ambient light, we will have a separate shader for diffuse light, together with a struct and a function that does all the calculations:

```glsl
#version 440 core

#include_part

struct DiffuseLight
{
    vec3 color;
    vec3 direction;
    float factor;
    bool isOn;
};

vec3 getDiffuseLightColor(DiffuseLight diffuseLight, vec3 normal);

#definition_part

vec3 getDiffuseLightColor(DiffuseLight diffuseLight, vec3 normal)
{
    if(!diffuseLight.isOn) {
        return vec3(0.0, 0.0, 0.0);
    }

    float finalIntensity = max(0.0, dot(normal, -diffuseLight.direction));
    finalIntensity = clamp(finalIntensity*diffuseLight.factor, 0.0, 1.0);
    return vec3(diffuseLight.color*finalIntensity);
}
```

Now we have a few more parameters to care about. The simple ones are again the color of the light and the flag telling whether it's turned on. Direction is self-explanatory, but factor isn't. Simply put, factor says how much this diffuse light should contribute to the final light color. Even if the surface faces the light perfectly (and the dot product is 1), maybe we don't want to overlight the object. So we multiply the calculated dot product by this factor at the end.

In the getDiffuseLightColor function, we calculate the diffuse light contribution using the given DiffuseLight and normal. As you can see, we calculate the dot product of the normal and the negated light direction. Because we don't want negative lighting, we clamp this value to 0.0 if it goes below. We don't have to worry about clamping the dot product to 1.0, because the dot product of two unit vectors is guaranteed to be at most 1.0. After that, we still have to multiply the calculated intensity by the factor (clamping the result to [0, 1] again, since the factor may exceed 1) and finally return the color.

Let's put all of this together in the final fragment shader:

```glsl
#version 440 core

#include "../lighting/ambientLight.frag"
#include "../lighting/diffuseLight.frag"
#include "../common/utility.frag"

layout(location = 0) out vec4 outputColor;

smooth in vec2 ioVertexTexCoord;
smooth in vec3 ioVertexNormal;

uniform sampler2D sampler;
uniform vec4 color;

uniform AmbientLight ambientLight;
uniform DiffuseLight diffuseLight;

void main()
{
    vec3 normal = normalize(ioVertexNormal);
    vec4 textureColor = texture(sampler, ioVertexTexCoord);
    vec4 objectColor = textureColor*color;
    vec3 lightColor = sumColors(getAmbientLightColor(ambientLight), getDiffuseLightColor(diffuseLight, normal));

    // The object color is multiplied (not summed) with the light color
    outputColor = objectColor*vec4(lightColor, 1.0);
}
```

At the very top, you can see the include directives. They include everything from another shader file that is in its #include_part, so only structs and declarations. Several uniform variables follow, including the ambient and diffuse light. As you can see in the main function, we calculate the final color of the object in several steps. First, we take the interpolated normal and normalize it. Then, we take the texel color and mix it with the object color, as we've always been doing.

And now comes the interesting part - we sum the colors of the ambient and diffuse light using the function sumColors, which comes from the included ../common/utility.frag shader. The problem with summing multiple lights is that the result might go out of the (0.0, 0.0, 0.0) ... (1.0, 1.0, 1.0) range, so we should clamp it. That's what this function does - it sums two colors and returns the clamped result, so that the sum doesn't produce an invalid color.
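The utility.frag shader itself is not shown in this article, so here is only a C++ sketch of the behavior described above: a component-wise sum clamped to the range 0.0 to 1.0. The type `Vec3` is a hypothetical stand-in for GLSL's vec3, and the real GLSL function may be written differently.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

using Vec3 = std::array<float, 3>; // hypothetical stand-in for vec3: r, g, b

// Sums two colors component by component and clamps each channel to [0, 1].
Vec3 sumColors(const Vec3& colorA, const Vec3& colorB)
{
    Vec3 result{};
    for (std::size_t i = 0; i < result.size(); ++i)
    {
        result[i] = std::min(1.0f, std::max(0.0f, colorA[i] + colorB[i]));
    }
    return result;
}
```

For example, a grey ambient light of 0.25 plus a diffuse contribution of 0.5 gives 0.75 per channel, while 0.75 plus 0.5 is capped at 1.0 instead of overflowing to 1.25.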

The very last step is mixing the objectColor with the lightColor. But this time we don't sum, we multiply - imagine you have a white object and dark light: the sum would still result in the white object being visible, but in darkness you should not see it. Don't forget that the normals are defined per vertex, while the lighting is calculated per fragment: if the 3 vertices of a triangle have 3 different normals (for example while rendering a torus), the normals are smoothly interpolated across all fragments of the triangle, which creates this beautiful shading effect.
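The difference between summing and multiplying is easy to see with numbers. Below is a tiny C++ sketch of the component-wise multiplication; `Vec3` and `modulate` are hypothetical names used only in this example.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<float, 3>; // hypothetical stand-in for vec3: r, g, b

// Component-wise product: the light color scales the object color.
Vec3 modulate(const Vec3& objectColor, const Vec3& lightColor)
{
    return { objectColor[0] * lightColor[0],
             objectColor[1] * lightColor[1],
             objectColor[2] * lightColor[2] };
}
```

A white object (1, 1, 1) under black light (0, 0, 0) comes out black, as it should. Summing the two would keep it white, which is exactly the problem described above.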

And I will tell you a secret now - what we've just implemented is that legendary Phong shading you have surely heard of! And you didn't even know it!

One last and super important thing to mention is the normal matrix. It's pretty normal that we apply rotations, scaling or translations to our object before we render it. But if we rotate the object by 90 degrees around the Y axis, don't we have to do the same thing with its normals? Yes, we do! That's really important, and that's where normal matrices come to save the day. A normal matrix is a matrix that we multiply normals with to adjust them to the transformations that have been applied to the object. To calculate it, we take the upper-left 3x3 part of the model matrix and compute the inverse of its transpose. There is a bit of theory behind those calculations, which I am too lazy to explain, but you can find numerous resources about it on the internet.
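As a minimal standalone sketch of that calculation (written without GLM so that it compiles on its own, and not the code of this tutorial's project), the inverse transpose of a 3x3 matrix can be computed from its cofactors. The names `Mat3`, `Vec3`, `normalMatrix` and `transform` are hypothetical, and the matrix is indexed as m[row][column], which differs from GLM's column-major layout.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Mat3 = std::array<std::array<float, 3>, 3>; // indexed as m[row][column]

// Inverse transpose of a 3x3 matrix. Since inverse(M) = transpose(C) / det(M),
// where C is the cofactor matrix, the inverse transpose is simply C / det(M).
Mat3 normalMatrix(const Mat3& m)
{
    const float c00 =  (m[1][1] * m[2][2] - m[1][2] * m[2][1]);
    const float c01 = -(m[1][0] * m[2][2] - m[1][2] * m[2][0]);
    const float c02 =  (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
    const float c10 = -(m[0][1] * m[2][2] - m[0][2] * m[2][1]);
    const float c11 =  (m[0][0] * m[2][2] - m[0][2] * m[2][0]);
    const float c12 = -(m[0][0] * m[2][1] - m[0][1] * m[2][0]);
    const float c20 =  (m[0][1] * m[1][2] - m[0][2] * m[1][1]);
    const float c21 = -(m[0][0] * m[1][2] - m[0][2] * m[1][0]);
    const float c22 =  (m[0][0] * m[1][1] - m[0][1] * m[1][0]);

    const float det = m[0][0] * c00 + m[0][1] * c01 + m[0][2] * c02;
    assert(std::fabs(det) > 0.0f); // a singular matrix has no inverse

    return {{ { c00 / det, c01 / det, c02 / det },
              { c10 / det, c11 / det, c12 / det },
              { c20 / det, c21 / det, c22 / det } }};
}

// Multiplies a matrix with a column vector.
Vec3 transform(const Mat3& m, const Vec3& v)
{
    return { m[0][0] * v[0] + m[0][1] * v[1] + m[0][2] * v[2],
             m[1][0] * v[0] + m[1][1] * v[1] + m[1][2] * v[2],
             m[2][0] * v[0] + m[2][1] * v[1] + m[2][2] * v[2] };
}
```

Non-uniform scaling shows why the model matrix alone is not enough. Take a surface with normal direction (1, 1, 0) and tangent (1, -1, 0), and scale it by (2, 1, 1). The tangent becomes (2, -1, 0). Transforming the normal with the model matrix gives (2, 1, 0), which is no longer perpendicular to the tangent (the dot product is 3). The normal matrix gives (0.5, 1, 0), and its dot product with the tangent is 0 again. Remember to normalize the result afterwards.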

In the ShaderProgram class, I have created a convenience method called setModelAndNormalMatrix, which does exactly that - it sets the model matrix, calculates the normal matrix and sets it as well! So let's have a look at some of the actual rendering code, for example the part that renders the rotating crates:

```cpp
void OpenGLWindow::renderScene()
{
    // ...

    for (const auto& position : cratePositions)
    {
        const auto crateSize = 8.0f;
        auto model = glm::translate(glm::mat4(1.0f), position);
        model = glm::translate(model, glm::vec3(0.0f, crateSize / 2.0f + 3.0f, 0.0f));
        model = glm::rotate(model, rotationAngleRad, glm::vec3(1.0f, 0.0f, 0.0f));
        model = glm::rotate(model, rotationAngleRad, glm::vec3(0.0f, 1.0f, 0.0f));
        model = glm::rotate(model, rotationAngleRad, glm::vec3(0.0f, 0.0f, 1.0f));
        model = glm::scale(model, glm::vec3(crateSize, crateSize, crateSize));

        // This part here is important for correct normals rendering
        mainProgram.setModelAndNormalMatrix(model);

        TextureManager::getInstance().getTexture("crate").bind(0);
        cube->render();
    }

    // ...
}
```

As I like to do everything object-oriented, I have created two new classes - AmbientLight and DiffuseLight. Both inherit from the ShaderStruct class, which looks like this:

```cpp
struct ShaderStruct
{
public:
    virtual void setUniform(ShaderProgram& shaderProgram, const std::string& uniformName) const = 0;

protected:
    static std::string constructAttributeName(const std::string& uniformName, const std::string& attributeName);
};
```

The idea is really simple - we want to be able to set any structure in a shader simply by calling the setUniform method. The constructAttributeName function builds the full name of an attribute in a struct - if the struct is called diffuseLight and the attribute is factor, then the complete name it builds is diffuseLight.factor. This will be reused in many places, which is why it lives in a separate function. Now let's examine the DiffuseLight class a bit, so that you get a better understanding of it:
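Going only by the description above, such a helper could be as small as the following C++ sketch. It is written as a free function for brevity (in the class it is a static member), and the actual implementation in the project may differ.

```cpp
#include <cassert>
#include <string>

// Builds the full name of a struct member as it is addressed in GLSL,
// e.g. "diffuseLight" + "factor" -> "diffuseLight.factor".
std::string constructAttributeName(const std::string& uniformName, const std::string& attributeName)
{
    assert(!uniformName.empty() && !attributeName.empty());
    return uniformName + "." + attributeName;
}
```

The dot notation is how GLSL exposes individual members of a uniform struct, so every member can be looked up and set by its full name.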

```cpp
struct DiffuseLight : ShaderStruct
{
    DiffuseLight(const glm::vec3& color, const glm::vec3& direction, const float factor, const bool isOn = true);

    void setUniform(ShaderProgram& shaderProgram, const std::string& uniformName) const override;

    glm::vec3 color; //!< Color of the diffuse light
    glm::vec3 direction; //!< Direction of the diffuse light
    float factor; //!< Factor to multiply dot product with (strength of light)
    bool isOn; //!< Flag telling, if the light is on
};
```

And now the setUniform method:

```cpp
void DiffuseLight::setUniform(ShaderProgram& shaderProgram, const std::string& uniformName) const
{
    shaderProgram[constructAttributeName(uniformName, "color")] = color;
    shaderProgram[constructAttributeName(uniformName, "direction")] = direction;
    shaderProgram[constructAttributeName(uniformName, "factor")] = factor;
    shaderProgram[constructAttributeName(uniformName, "isOn")] = isOn;
}
```

You can see that we actually set each member of the struct one by one. We already have methods for setting primitives like float or integer, so this is not a problem. Then, back in the renderScene method, setting the diffuse and ambient light has never been easier:

```cpp
void OpenGLWindow::renderScene()
{
    // ...

    ambientLight.setUniform(mainProgram, ShaderConstants::ambientLight());
    diffuseLight.setUniform(mainProgram, ShaderConstants::diffuseLight());

    // ...
}
```

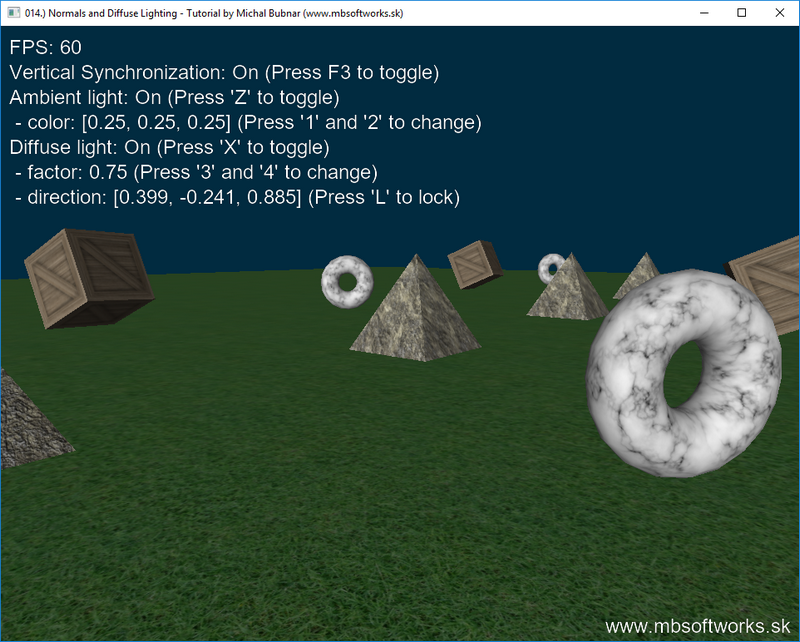

This is the fruit of today's effort:

Wow, that's a really awesome result, I have to say! Don't forget to play with all the keys that I have provided and experiment with different combinations of ambient / diffuse light with different parameters - this will help you understand it better. The coolest feature is the direction lock - if you press the 'L' key, the light direction will be locked to the direction your camera is looking. This way, you can see that the objects are lit differently from different directions!

As always, I hope you've enjoyed this article and, although it has been a bit longer, I hope you learned something new! It's really hard to go into detail about everything, and there are always those small implementation details that matter, so try to play around and experiment with the code to get a grasp of it.

