Hi there and welcome to the 25th tutorial from my OpenGL4 series! This one discusses how to implement a particle system effectively - something that can simulate thousands of small particles to achieve some cool effect (like fire - fire consists of many small fire particles). We will use an OpenGL feature called transform feedback to achieve this - this way the particles will be managed by the GPU, which is by nature capable of handling things in parallel. So without further ado, let's get into the tutorial!

Before we get to the actual implementation, let's discuss what this transform feedback actually is. It's a process in which you capture (or record) things that you have just rendered. More precisely, the values written by the last vertex-processing stage (in our case the geometry shader) are captured into a buffer. So after rendering is done, you have a record of what you rendered (but only the parts you're interested in) and you can use that data for anything you want.

I guess it's easy to understand, but how exactly is this supposed to help us with a particle system? The trick is that we will "render" vertices which represent particles. The shaders will update the vertices (particles) during the render process and record the updated particles. These updated particles will then be re-used as the input of the next frame! The idea is relatively simple.

How do we bring this idea to life? We just need two kinds of shader programs - one shader program for updating (and also generating) particles and one shader program for rendering particles. Rendering is a bit easier, but in order to render something, we first need to generate some particles. In this tutorial, I have implemented two different particle systems - fire and snow. I will explain fire in a very detailed way and leave the snow implementation to the reader (it's very similar, the concepts are the same). So let's start with the fire update shader program.

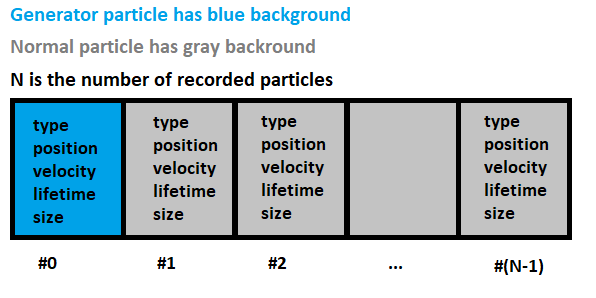

I'm not really sure in which order to explain the whole concept, so I will try this - without any prior knowledge, let's just accept the fact that one vertex = one particle, ok? And because by now you should know the concept of vertices having arbitrary attributes, these attributes will simply be particle attributes. So let's take a fire particle and see what properties it has: type (generator or normal particle), position, velocity, lifetime and size.

Now if we render "vertices", a.k.a. particles, as points, we can use the power of the geometry shader to change the attributes of our particles! Not only that, the geometry shader will also generate new particles! So our update shader program will consist of a vertex shader (to pass the initial attributes on) and a geometry shader (to alter existing attributes and generate particles). Let's examine them both:

The vertex shader is pretty simple and easy to understand - all it does is pass all vertex attributes on to the geometry shader:

```glsl
#version 440 core

layout (location = 0) in int inType;
layout (location = 1) in vec3 inPosition;
layout (location = 2) in vec3 inVelocity;
layout (location = 3) in float inLifetime;
layout (location = 4) in float inSize;

out int ioType;
out vec3 ioPosition;
out vec3 ioVelocity;
out float ioLifetime;
out float ioSize;

void main()
{
    ioType = inType;
    ioPosition = inPosition;
    ioVelocity = inVelocity;
    ioLifetime = inLifetime;
    ioSize = inSize;
}
```

Does it need further explanation? I don't really think so.

More interesting things happen in the geometry shader. Let's go through it part by part:

```glsl
#version 440 core

#include "../common/random.glsl"

const int PARTICLE_TYPE_GENERATOR = 0;
const int PARTICLE_TYPE_NORMAL = 1;

layout(points) in;
layout(points) out;
layout(max_vertices = 64) out;

// All that we get from vertex shader
in int ioType[];
in vec3 ioPosition[];
in vec3 ioVelocity[];
in float ioLifetime[];
in float ioSize[];

// All that we send further and that is recorded by transform feedback
out int outType;
out vec3 outPosition;
out vec3 outVelocity;
out float outLifetime;
out float outSize;

//...
```

Let's start off easily. Apart from including the shader with random functions (which will be covered later), we define the inputs and outputs of the shader. When working with geometry shaders, the inputs are always treated as arrays, but because our input primitive is a point, we will just use the inputs at index 0. With layout(points) in we say that the inputs to the shader are points. With layout(points) out we say that the output primitive type is simply a point as well. Finally, with layout(max_vertices = 64) out we promise OpenGL that a single invocation won't output more than 64 vertices (in other words - a burst of generated particles, together with the generator itself, won't exceed 64 particles). After that, the series of in variables are just the inputs and the series of out variables are the matching output attributes.

Just one last note about max_vertices - this number has different limits on different GPUs and the limit can be queried with glGetIntegerv and the constant GL_MAX_GEOMETRY_OUTPUT_VERTICES. I haven't tested it, but if you emit more vertices than you have declared, I suppose the extra ones will just get ignored.

```glsl
// Position where new particles are generated
uniform vec3 generatedPositionMin;
uniform vec3 generatedPositionRange;

// Velocity of newly generated particles
uniform vec3 generatedVelocityMin;
uniform vec3 generatedVelocityRange;

// Size of newly generated particles
uniform float generatedSizeMin;
uniform float generatedSizeRange;

// Lifetime of newly generated particles
uniform float generatedLifetimeMin;
uniform float generatedLifetimeRange;

// Time passed since last frame (in seconds)
uniform float deltaTime;

// How many particles should be generated during this pass - if greater than zero, then particles are generated
uniform int numParticlesToGenerate;

//...
```

In this part, we have all properties for generating particles. For example, you can set the minimal position and the range for the generated position. The same concept works for the generated velocity, size and lifetime. What do I mean by range? Let's take a simple example with generating lifetime. We want the generated lifetime to be between 0.5 and 1.5 seconds. This means that generatedLifetimeMin is 0.5 and generatedLifetimeRange is 1.0 (the difference 1.5 - 0.5).
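To make the min / range idea concrete, here is a minimal CPU-side sketch in C++. The names MinRange, makeMinRange and sampleMinRange are made up for this illustration, and the formula min + range * random is only my assumption of what a helper like randomFloatMinRange boils down to (its body is not shown in this article).

```cpp
#include <cassert>

// Hypothetical helper type: stores an interval as (min, range) instead of (min, max)
struct MinRange
{
    float min;
    float range;
};

// Done once on the CPU, e.g. right before the uniforms are uploaded
MinRange makeMinRange(float minValue, float maxValue)
{
    assert(maxValue >= minValue);
    return { minValue, maxValue - minValue };
}

// Per-particle work is then a single multiply-add; random01 is expected to lie in [0, 1]
float sampleMinRange(const MinRange& interval, float random01)
{
    return interval.min + interval.range * random01;
}
```

With makeMinRange(0.5f, 1.5f) we get min 0.5 and range 1.0, so a random number of 0 gives a lifetime of 0.5 seconds and a random number of 1 gives 1.5 seconds.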

So from the minimal and maximal value, we have kept the minimal value and the range spanning from that value! Why is it done like this? Because it's faster to precalculate the range once than to calculate the difference for every particle. It's a small optimization, but I think it adds up with many, many particles. Other than that, there is one more uniform variable called numParticlesToGenerate, which is related to the generating of particles (covered in the next paragraph):

```glsl
void main()
{
    initializeRandomNumberGeneratorSeed();

    // First, check if the incoming type of particle is generator
    outType = ioType[0];
    if(outType == PARTICLE_TYPE_GENERATOR)
    {
        // If it's the case, always emit generator particle further
        EmitVertex();
        EndPrimitive();

        // And now generate random particles, if numParticlesToGenerate is greater than zero
        for(int i = 0; i < numParticlesToGenerate; i++)
        {
            outType = PARTICLE_TYPE_NORMAL;
            outPosition = randomVectorMinRange(generatedPositionMin, generatedPositionRange);
            outVelocity = randomVectorMinRange(generatedVelocityMin, generatedVelocityRange);
            outLifetime = randomFloatMinRange(generatedLifetimeMin, generatedLifetimeRange);
            outSize = randomFloatMinRange(generatedSizeMin, generatedSizeRange);
            EmitVertex();
            EndPrimitive();
        }

        return;
    }

    // If we get here, this means we deal with normal fire particle
    // Update its lifetime first and if it survives, emit the particle
    outLifetime = ioLifetime[0] - deltaTime;
    if(outLifetime > 0.0)
    {
        outVelocity = ioVelocity[0];
        outPosition = ioPosition[0] + outVelocity * deltaTime;
        outSize = ioSize[0];
        EmitVertex();
        EndPrimitive();
    }
}
```

This is possibly the most important part of this tutorial as this is where the magic happens. Remember the type of particle? In my tutorial, we have two types of particles: generator particles (PARTICLE_TYPE_GENERATOR) and normal particles (PARTICLE_TYPE_NORMAL).

If the particle is a generator, then we ALWAYS emit it further and sometimes emit additional new particles together with it. We don't care about the other attributes of a generator, so its position, velocity, size and lifetime don't even matter. Because we always emit the generator further, we ensure that new particles will keep getting generated. But how do we know if we want to generate additional particles? This is controlled by numParticlesToGenerate, which is set from outside. In our program, we keep track of the generation time and when the time comes, we set this value to something above zero. In most frames, it's just 0, which means no new particles will be emitted. But every few frames, a burst of particles is generated.

An important trick here is that we have just a few generators among the particles. In this tutorial there is even only one single generator and it's always the first particle in the stream of particles!
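The "keep track of generation time" part lives on the CPU side and is not shown in this article, so here is only a rough sketch of how it could look. The class ParticleGenerationTimer and all of its members are hypothetical names, not the actual code of the tutorial.

```cpp
#include <cassert>

// Hypothetical CPU-side helper that decides when a burst of particles is due
class ParticleGenerationTimer
{
public:
    ParticleGenerationTimer(float generateEverySeconds, int particlesPerBurst)
        : generateEverySeconds_(generateEverySeconds)
        , particlesPerBurst_(particlesPerBurst)
    {
        assert(generateEverySeconds > 0.0f);
    }

    // Call once per frame; the result would be uploaded as numParticlesToGenerate
    int update(float deltaTime)
    {
        timeSinceLastBurst_ += deltaTime;
        if (timeSinceLastBurst_ < generateEverySeconds_) {
            return 0; // most frames: nothing new is generated
        }

        timeSinceLastBurst_ -= generateEverySeconds_;
        return particlesPerBurst_;
    }

private:
    float generateEverySeconds_;
    int particlesPerBurst_;
    float timeSinceLastBurst_ = 0.0f;
};
```

One thing to keep in mind with such a helper is that the burst size plus the generator itself has to fit into max_vertices of the update geometry shader (64 in this tutorial).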

If the particle is a normal particle, then we have to update all of its attributes. We start with outLifetime, because this determines the destiny of the particle. If the particle still has some lifetime left after the update, we update its other attributes like position and emit it further. If not, we don't update anything and don't emit the particle at all, thus sparing additional computational time.
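If it helps, the update of a normal particle can be mirrored on the CPU. The following C++ sketch is not part of the tutorial's code (the types Vec3 and Particle and the function updateNormalParticle are made up), it just follows the same steps as the geometry shader: decrease lifetime, and only if the particle survives, move it by velocity * deltaTime.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// CPU-side stand-in for one particle with the same attributes as in the shaders
struct Particle
{
    int type;
    Vec3 position;
    Vec3 velocity;
    float lifetime;
    float size;
};

// Returns false when the particle has died, which corresponds to
// not calling EmitVertex() in the geometry shader
bool updateNormalParticle(const Particle& in, float deltaTime, Particle& out)
{
    assert(deltaTime >= 0.0f);

    const float newLifetime = in.lifetime - deltaTime;
    if (newLifetime <= 0.0f) {
        return false;
    }

    out = in;
    out.lifetime = newLifetime;
    out.position = { in.position.x + in.velocity.x * deltaTime,
                     in.position.y + in.velocity.y * deltaTime,
                     in.position.z + in.velocity.z * deltaTime };
    return true;
}
```

A particle with 1 second of lifetime and velocity (0, 2, 0) ends up 0.5 units higher with 0.75 seconds left after a 0.25 second step, and disappears when the step is a full second.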

Now that we have processed all particles with the update shader program, it's time to learn how to record those output data to a buffer with transform feedback.

Emitted updated particles must be recorded and that's where transform feedback comes into play. When you render with transform feedback active, the output geometry (in our case particles) is recorded one by one into a buffer! But we will need two buffers - one serving as the source of particles and another one for capturing the updated particles. This is so-called double buffering and it's a common trick. One frame, we read from buffer A and write to buffer B, and the next frame we read from buffer B (updated particles) and write to buffer A. Those two buffers keep alternating.
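The alternation of the two buffers can be sketched without any OpenGL at all. In the following C++ illustration, PingPongBuffers and decayStep are hypothetical names and every particle is reduced to just its lifetime. Only the index juggling (write index = 1 - read index, then swap) is the point here.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical CPU stand-in for the two GPU buffers
struct PingPongBuffers
{
    std::array<std::vector<float>, 2> buffers;
    int readIndex = 0;

    int writeIndex() const { return 1 - readIndex; }
    const std::vector<float>& source() const { return buffers[readIndex]; }
    std::vector<float>& target() { return buffers[writeIndex()]; }
    void swap() { readIndex = writeIndex(); }
};

// One "frame": read everything from the source, write survivors to the target, swap
void decayStep(PingPongBuffers& particles, float deltaTime)
{
    std::vector<float>& out = particles.target();
    assert(&out != &particles.source()); // we must never read and write the same buffer

    out.clear();
    for (const float lifetime : particles.source()) {
        if (lifetime - deltaTime > 0.0f) {
            out.push_back(lifetime - deltaTime);
        }
    }

    particles.swap();
}
```

After every call to decayStep, source() returns the buffer that has just been written, which is exactly what the next frame wants to read from.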

Our update process starts off with buffer A, which contains only one particle. And of course, that particle is a generator. Without it, our updating would be over immediately. But before we start, we need to tell OpenGL which attributes we want to record during transform feedback. This is done with the function glTransformFeedbackVaryings. What is very important here is that this has to be done before linking the shader program. The reason is that the linker has to check whether what you want to record is really present in the shader program. Even if we want to record all of the out attributes, we have to tell OpenGL explicitly. These are the arguments this function takes: program (ID of the shader program), count (number of variables to record), varyings (array of C strings with the names of the variables) and bufferMode (GL_INTERLEAVED_ATTRIBS to write all variables into a single buffer, or GL_SEPARATE_ATTRIBS to write each variable into its own buffer).

For the fire particle system, the call would look like this:

```cpp
const char* variableNames[] =
{
    "outType",
    "outPosition",
    "outVelocity",
    "outLifetime",
    "outSize",
};

glTransformFeedbackVaryings(updateShaderProgram.getShaderProgramID(), 5, variableNames, GL_INTERLEAVED_ATTRIBS);
```

This would record all of our particles into the buffer one by one, sequentially, with the five attributes of every particle stored right after each other in the order given above.
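One way to picture the interleaved layout is a plain C++ struct. The sketch below is just an illustration (RecordedFireParticle and particleByteOffset are made-up names) and it assumes 32-bit ints and floats, which is what transform feedback writes for int, float and vec3 outputs. Every recorded particle then takes 36 bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// CPU-side mirror of one recorded fire particle; field order matches variableNames
struct RecordedFireParticle
{
    std::int32_t type;   // outType
    float position[3];   // outPosition
    float velocity[3];   // outVelocity
    float lifetime;      // outLifetime
    float size;          // outSize
};

static_assert(sizeof(float) == 4, "this sketch assumes 32-bit floats");
static_assert(sizeof(RecordedFireParticle) == 36, "4 + 12 + 12 + 4 + 4 bytes, no padding");
static_assert(offsetof(RecordedFireParticle, position) == 4, "position follows type");

// Byte offset of a particle inside a buffer holding particleCount particles
std::size_t particleByteOffset(std::size_t particleIndex, std::size_t particleCount)
{
    assert(particleIndex < particleCount);
    return particleIndex * sizeof(RecordedFireParticle);
}
```

The size of the struct is the stride and the offsets of its members are the byte offsets that vertex attribute pointers have to use when the recorded buffer is read back as vertex data.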

Of course, it would not be very practical to create such a C-style array manually, so I have implemented a higher level approach. We have a method addRecordedVariable, which adds the name of a variable to a list of recorded variables. And from this list, we can build a C-style array easily! Notice that this method takes two parameters - the name of the recorded attribute and an optional boolean saying whether this attribute is needed for rendering.

What does it mean? Well, some attributes might not be needed for rendering and thus we want to set things up without them. Take velocity as an example. We simply won't render the velocity of a particle. Although it would be possible, in this tutorial we just need the position, size and lifetime of a particle (the lifetime is used to implement the fire fade-out). For that reason, we will keep two pairs of VAOs - one pair is used for updating particles and one pair is used for rendering particles. Again, it's just another mini optimization, but hey - every little bit helps when it comes to real-time graphics!

For now, I won't get into the details of how to set up transform feedback with our buffers, it's only important that you get the big picture. Let's now examine the render shader program, which takes particles as input and renders them.

The render vertex shader is again pretty simple and easy to understand - all it does is pass the attributes needed for rendering on to the geometry shader:

```glsl
#version 440 core

layout (location = 1) in vec3 inPosition;
layout (location = 3) in float inLifetime;
layout (location = 4) in float inSize;

out float ioLifetime;
out float ioSize;

void main()
{
    gl_Position = vec4(inPosition, 1.0);
    ioLifetime = inLifetime;
    ioSize = inSize;
}
```

There is one thing worth mentioning though. Can you see that two attributes are missing here? We skip attributes 0 and 2. What are they? The type and the velocity! That's right - for rendering, we need neither the type (you'll see that the generator particle won't even reach rendering) nor the velocity (we are not displaying the speed of particles visually in any way). Again, this should lead to more efficient rendering. And all this information is stored in a separate VAO for rendering only.

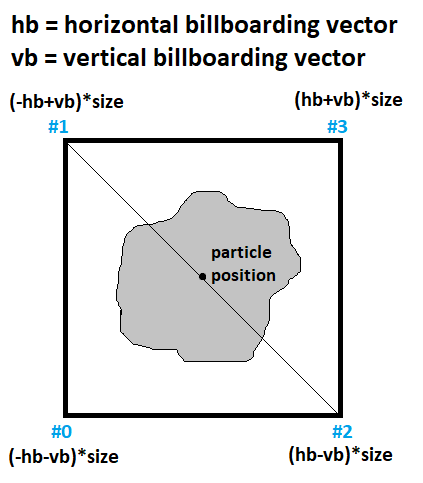

Here is where the particles get transformed from a single vertex to a nice quad:

```glsl
#version 440 core

layout(std140, binding = 0) uniform MatricesBlock
{
    mat4 projectionMatrix;
    mat4 viewMatrix;
} block_matrices;

layout(points) in;
layout(triangle_strip) out;
layout(max_vertices = 4) out;

// All that we get from vertex shader
in float ioLifetime[];
in float ioSize[];

// Color of particles
uniform vec3 particlesColor;

// Vectors to render particles as billboards facing camera
uniform vec3 billboardHorizontalVector;
uniform vec3 billboardVerticalVector;

// Output variables
smooth out vec2 ioTexCoord;
smooth out vec4 ioEyeSpacePosition;
flat out vec4 ioColor;

void main()
{
    vec3 particlePosition = gl_in[0].gl_Position.xyz;
    float size = ioSize[0];
    mat4 mVP = block_matrices.projectionMatrix * block_matrices.viewMatrix;

    // Mix particles color with alpha calculated from particle lifetime
    ioColor = vec4(particlesColor, min(1.0, ioLifetime[0]));

    // Emit bottom left vertex
    vec4 bottomLeft = vec4(particlePosition+(-billboardHorizontalVector-billboardVerticalVector)*size, 1.0);
    ioTexCoord = vec2(0.0, 0.0);
    gl_Position = mVP * bottomLeft;
    ioEyeSpacePosition = block_matrices.viewMatrix * bottomLeft;
    EmitVertex();

    // Emit top left vertex
    vec4 topLeft = vec4(particlePosition+(-billboardHorizontalVector+billboardVerticalVector)*size, 1.0);
    ioTexCoord = vec2(0.0, 1.0);
    gl_Position = mVP * topLeft;
    ioEyeSpacePosition = block_matrices.viewMatrix * topLeft;
    EmitVertex();

    // Emit bottom right vertex
    vec4 bottomRight = vec4(particlePosition+(billboardHorizontalVector-billboardVerticalVector)*size, 1.0);
    ioTexCoord = vec2(1.0, 0.0);
    gl_Position = mVP * bottomRight;
    ioEyeSpacePosition = block_matrices.viewMatrix * bottomRight;
    EmitVertex();

    // Emit top right vertex
    vec4 topRight = vec4(particlePosition+(billboardHorizontalVector+billboardVerticalVector)*size, 1.0);
    ioTexCoord = vec2(1.0, 1.0);
    gl_Position = mVP * topRight;
    ioEyeSpacePosition = block_matrices.viewMatrix * topRight;
    EmitVertex();

    // And finally end the primitive
    EndPrimitive();
}
```

To explain what's happening here, let's examine the layout part first. We receive points and we output a triangle strip. And because we plan to transform every particle into a single quad, we can tell OpenGL with confidence that we won't output more than 4 vertices. Going further, we can see the input variables, the particles color and two uniform vectors whose names start with the word billboard. What are they for? In general, billboarding is a rendering technique that renders an object in such a way that it always faces the camera. In order to do so, we need a function that accepts the camera view and up vectors as parameters and calculates the horizontal and vertical vectors for billboarding. The function is implemented in the TransformFeedbackParticleSystem class and it looks like this:

```cpp
void TransformFeedbackParticleSystem::calculateBillboardingVectors(const glm::vec3& cameraViewVector, const glm::vec3& cameraUpVector)
{
    billboardHorizontalVector_ = glm::normalize(glm::cross(cameraViewVector, cameraUpVector));
    billboardVerticalVector_ = glm::normalize(glm::cross(cameraViewVector, -billboardHorizontalVector_));
}
```

Having these two vectors, we can then render every particle in the following manner (the text in blue represents the emitting order):

The idea depicted above is implemented in the main method of the geometry shader. With those two vectors, we emit the bottom-left, top-left, bottom-right and top-right vertices (in this exact order) and because we output a triangle strip, the result is a quad consisting of two triangles. This quad is always facing us, because it's rendered with billboarding vectors calculated from the camera properties.
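To check the math by hand, here is a small self-contained C++ sketch of the same two cross products and of the four corners. It deliberately doesn't use glm, so Vec3 and all the helper functions below are made up for this illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 vecAdd(const Vec3& a, const Vec3& b) { return { a.x + b.x, a.y + b.y, a.z + b.z }; }
Vec3 vecScale(const Vec3& v, float s) { return { v.x * s, v.y * s, v.z * s }; }
Vec3 vecNegate(const Vec3& v) { return { -v.x, -v.y, -v.z }; }

Vec3 vecCross(const Vec3& a, const Vec3& b)
{
    return { a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
}

Vec3 vecNormalize(const Vec3& v)
{
    const float length = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(length > 0.0f); // view and up vectors must not be parallel
    return vecScale(v, 1.0f / length);
}

struct BillboardVectors { Vec3 horizontal; Vec3 vertical; };

// Same two cross products as in calculateBillboardingVectors
BillboardVectors calculateBillboardingVectors(const Vec3& cameraView, const Vec3& cameraUp)
{
    const Vec3 horizontal = vecNormalize(vecCross(cameraView, cameraUp));
    const Vec3 vertical = vecNormalize(vecCross(cameraView, vecNegate(horizontal)));
    return { horizontal, vertical };
}

struct Quad { Vec3 bottomLeft, topLeft, bottomRight, topRight; };

// Corners in the order in which the geometry shader emits them
Quad billboardQuad(const Vec3& center, const BillboardVectors& b, float size)
{
    const Vec3 left = vecNegate(b.horizontal);
    const Vec3 down = vecNegate(b.vertical);
    return {
        vecAdd(center, vecScale(vecAdd(left, down), size)),
        vecAdd(center, vecScale(vecAdd(left, b.vertical), size)),
        vecAdd(center, vecScale(vecAdd(b.horizontal, down), size)),
        vecAdd(center, vecScale(vecAdd(b.horizontal, b.vertical), size)),
    };
}
```

For a camera looking down the negative Z axis with up vector (0, 1, 0), the horizontal vector comes out as (1, 0, 0) and the vertical one as (0, 1, 0), so the quad lies in the XY plane and faces the camera. Also notice that the quad spans size in both directions from the center, so its side is twice the particle size.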

One more thing to mention - can you see how the output color is calculated? The alpha (transparency) of a particle is calculated from its lifetime. The code just says that if the remaining lifetime is more than one second, we want the particle to be fully opaque. Once it drops below 1.0, the particle starts to fade away and it becomes fully transparent right when it dies. This way, particles disappear seamlessly!
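The fade-out is a one-liner, but here is a tiny C++ sketch of it anyway. The function particleAlpha and its fadeDuration parameter are hypothetical - the shader in this tutorial has the fade duration hardcoded to one second, which corresponds to the default value below.

```cpp
#include <algorithm>
#include <cassert>

// Alpha of a particle computed from its remaining lifetime (in seconds).
// With fadeDuration = 1 this is the same as min(1.0, lifetime) in the shader.
float particleAlpha(float remainingLifetime, float fadeDuration = 1.0f)
{
    assert(fadeDuration > 0.0f);
    return std::min(1.0f, remainingLifetime / fadeDuration);
}
```

So a particle with 2.5 seconds left is fully opaque, while a particle with 0.25 seconds left is rendered with alpha 0.25.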

The last part that we should go through is in the fragment shader:

```glsl
#version 440 core

#include "../fog/fog.frag"

smooth in vec2 ioTexCoord;
smooth in vec4 ioEyeSpacePosition;
flat in vec4 ioColor;

uniform sampler2D sampler;
uniform FogParameters fogParams;

out vec4 outputColor;

void main()
{
    vec4 textureColor = texture(sampler, ioTexCoord);
    vec4 inputColor = ioColor;

    // If fog is enabled, mix input color with fog
    if(fogParams.isEnabled)
    {
        float fogCoordinate = abs(ioEyeSpacePosition.z / ioEyeSpacePosition.w);
        float fogFactor = getFogFactor(fogParams, fogCoordinate);
        inputColor = vec4(mix(ioColor.xyz, fogParams.color, fogFactor), ioColor.a * (1.0 - fogFactor));
    }

    outputColor = vec4(textureColor.xyz, 1.0) * inputColor;
}
```

In this shader, we basically just take the color calculated in the geometry shader, mix it with the texture and pass it further. But I had to make things a bit more complicated, why not. Because this tutorial was being written before Christmas 2020, I wanted to have nice winter scenery and I wanted to apply fog so hard! And if the fog is enabled, we have to incorporate the fog into our particles. I have found a way that looks acceptable, but it turned out to be more difficult than I thought. I was just tinkering with the equations until I was satisfied. You can enable fog and see for yourself how it behaves (I think it's very nice).

To make this tutorial complete, let's have a look at the initialization of particle system in a step by step manner:

```cpp
bool TransformFeedbackParticleSystem::initialize()
{
    // If the initialization went successfully in the past, just return true
    if (isInitialized_) {
        return true;
    }

    // Add two default recorded attributes
    addRecordedInt("outType", false);
    addRecordedVec3("outPosition");

    // If the initialization of shaders and recorded variables fails, don't continue
    if (!initializeShadersAndRecordedVariables()) {
        return false;
    }

    // Now that all went through just fine, let's generate all necessary OpenGL objects
    generateOpenGLObjects();
    isInitialized_ = true;
    return true;
}
```

First, we start off by gathering the list of recorded variables. Two basic ones that will probably always be present are type and position. The type is also marked with false to indicate that we don't need it for rendering. Then we proceed with the specific initialization of the particle system (fire has different programs and attributes than snow, and they also have different sets of recorded variables). This is all hidden in the abstract method initializeShadersAndRecordedVariables. In this method, we also set up the shader programs responsible for updating and rendering. This is very important, because before linking them, we have to tell OpenGL which variables we want to record during transform feedback. This whole idea is implemented in the ShaderProgram method setTransformFeedbackRecordedVariables:

```cpp
void ShaderProgram::setTransformFeedbackRecordedVariables(const std::vector<std::string>& recordedVariablesNames, const GLenum bufferMode) const
{
    // Build the C-style array of names that OpenGL expects
    std::vector<const char*> recordedVariablesNamesPtrs;
    for (const auto& recordedVariableName : recordedVariablesNames) {
        recordedVariablesNamesPtrs.push_back(recordedVariableName.c_str());
    }

    glTransformFeedbackVaryings(_shaderProgramID, static_cast<GLsizei>(recordedVariablesNamesPtrs.size()), recordedVariablesNamesPtrs.data(), bufferMode);
}
```

This method is very practical, because it internally converts our high-level structures into their low-level C counterparts, which OpenGL requires. After this part, there is the last method, generateOpenGLObjects. As this method is pretty long, I won't show it here, but in summary, it just creates all the objects necessary for the transform feedback to work. Here is a list of the objects: a transform feedback object, a query object for counting the recorded particles, two VBOs holding the particles and two pairs of VAOs (one pair for updating and one pair for rendering).

The generateOpenGLObjects method calls another method, this time from the RecordedVariable class - enableAndSetupVertexAttribPointer. This is quite an important one, so I'll show it here:

```cpp
void TransformFeedbackParticleSystem::RecordedVariable::enableAndSetupVertexAttribPointer(GLuint index, GLsizei stride, GLsizeiptr byteOffset) const
{
    glEnableVertexAttribArray(index);
    if (glType == GL_INT)
    {
        // If OpenGL type is an integer or consists of integers, the glVertexAttribIPointer must be called (notice the I letter)
        glVertexAttribIPointer(index, count, glType, stride, reinterpret_cast<const GLvoid*>(byteOffset));
    }
    else
    {
        // If OpenGL type is float or consists of floats, the glVertexAttribPointer must be called
        glVertexAttribPointer(index, count, glType, GL_FALSE, stride, reinterpret_cast<const GLvoid*>(byteOffset));
    }
}
```

It calls a function that we should already know - glVertexAttribPointer. However, there are multiple versions of this function and it took me quite a while to figure out why some of my code didn't work as I expected. There are simply different versions of this function for floating point and for integer types (check the link). With glVertexAttribPointer, the data is always converted to floating point, so an int attribute in the shader has to be set up with glVertexAttribIPointer. And that's why in our method, we must differentiate between the types as well.

The code below is responsible for updating particles:

```cpp
void TransformFeedbackParticleSystem::updateParticles(const float deltaTime)
{
    // Can't update if system is not initialized
    if (!isInitialized_) {
        return;
    }

    // Calculate write buffer index
    const auto writeBufferIndex = 1 - readBufferIndex_;

    // Prepare update of particles
    prepareUpdateParticles(deltaTime);

    // Bind transform feedback object, VAO for updating particles and tell OpenGL where to store recorded data
    glBindTransformFeedback(GL_TRANSFORM_FEEDBACK, transformFeedbackID_);
    glBindVertexArray(updateVAOs_[readBufferIndex_]);
    glBindBufferBase(GL_TRANSFORM_FEEDBACK_BUFFER, 0, particlesVBOs_[writeBufferIndex]);

    // Update particles with special update shader program and also observe how many particles have been written
    // Discard rasterization - we don't want to render this, it's only about updating
    glEnable(GL_RASTERIZER_DISCARD);
    glBeginQuery(GL_TRANSFORM_FEEDBACK_PRIMITIVES_WRITTEN, numParticlesQueryID_);
    glBeginTransformFeedback(GL_POINTS);

    glDrawArrays(GL_POINTS, 0, numberOfParticles_);

    glEndTransformFeedback();
    glEndQuery(GL_TRANSFORM_FEEDBACK_PRIMITIVES_WRITTEN);
    glGetQueryObjectiv(numParticlesQueryID_, GL_QUERY_RESULT, &numberOfParticles_);

    // Swap read / write buffers for the next frame
    readBufferIndex_ = writeBufferIndex;

    // Unbind transform feedback and restore normal rendering (don't discard anymore)
    glBindTransformFeedback(GL_TRANSFORM_FEEDBACK, 0);
    glDisable(GL_RASTERIZER_DISCARD);
}
```

Basically we do the following - a transform feedback object is bound using glBindTransformFeedback. With glBindBufferBase, we tell OpenGL which buffer the output data should be recorded into. Because it's the updating phase, we don't need to display anything yet, and that's what glEnable(GL_RASTERIZER_DISCARD) is for. Then we begin the query for the number of written particles and the transform feedback process itself with glBeginQuery and glBeginTransformFeedback. Finally, we render the particles as points and then end both the transform feedback and the query with glEndTransformFeedback and glEndQuery. We also need to find out how many particles have been recorded during transform feedback, because that's how many particles we have to process in the next frame. This is done with the glGetQueryObjectiv function. At the end, we swap the read and write buffers and finally re-enable rasterization by calling glDisable(GL_RASTERIZER_DISCARD).

Unlike the updating of particles, rendering is really a piece of cake. The particles' data is now in the buffer and all we need to do is issue a rendering call.

```cpp
void TransformFeedbackParticleSystem::renderParticles()
{
    // Can't render if system is not initialized
    if (!isInitialized_) {
        return;
    }

    // Prepare rendering and then render from index 1 (at index 0, there is always generator)
    prepareRenderParticles();
    glBindVertexArray(renderVAOs_[readBufferIndex_]);
    glDrawArrays(GL_POINTS, 1, numberOfParticles_ - 1);
}
```

Two important things to mention here - we use the VAOs for rendering, not the ones for updating. The updating ones would work too, but with the rendering ones, we don't enable unnecessary vertex attributes like velocity. Moreover, we render starting from index 1. Why is that? Because the first particle is the generator, that's how we initialized our buffer! That's why we don't even need the particle type, because the generator particle won't ever make it to rendering!

All concepts that I've explained for the fire particle system hold true for the snow particle system. What's different is that snow particles have one additional integer attribute - the snowflake index. My snowflakes texture looks like this:

As you can see, it has 4 different snowflakes. I have numbered them from 0 to 3 - from top to bottom and left to right. This way, we can render different snowflakes and the whole effect just looks a lot better! I won't explain the snowflakes in great detail, because the ideas are almost identical, but I encourage you to have a look at the snow particle system shaders.

As promised, I will briefly go through the random.glsl shader program:

```glsl
#version 440 core

uniform vec3 initialRandomGeneratorSeed; // Initial RNG seed
vec3 currentRandomGeneratorSeed; // Current RNG seed

float randomFloat()
{
    uint n = floatBitsToUint(currentRandomGeneratorSeed.y * 214013.0 + currentRandomGeneratorSeed.x * 2531011.0 + currentRandomGeneratorSeed.z * 141251.0);
    n = n * (n * n * 15731u + 789221u);
    n = (n >> 9u) | 0x3F800000u;

    float result = 2.0 - uintBitsToFloat(n);
    currentRandomGeneratorSeed = vec3(currentRandomGeneratorSeed.x + 147158.0 * result,
        currentRandomGeneratorSeed.y * result + 415161.0 * result,
        currentRandomGeneratorSeed.z + 324154.0 * result);

    return result;
}

void initializeRandomNumberGeneratorSeed()
{
    currentRandomGeneratorSeed = initialRandomGeneratorSeed;
}

// More functions here, check the whole shader online
```

This is just an excerpt from it, but the full file provides functions to generate random floats, integers and vectors. Why do we need that? Unfortunately, GLSL doesn't have any kind of random function, so we have to implement our own. The idea is that we set the random number generator seed as a vec3 from outside of the shader program (where we have access to random functions) and then internally carry on with some pseudo-random number generation. Honestly, I didn't write this code from scratch, I just found it somewhere on the Internet back in 2012 and have used it since then, because it works very well. I just extended it to generate vectors and integers.
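The most cryptic part of randomFloat is probably the bit manipulation, so here is a small C++ sketch of those two steps only. The function names are made up and std::memcpy plays the role of uintBitsToFloat. The sketch assumes 32-bit IEEE 754 floats and it does not reproduce the seed handling of the shader.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// The integer scrambling step from randomFloat (unsigned overflow simply wraps around)
std::uint32_t scrambleBits(std::uint32_t n)
{
    return n * (n * n * 15731u + 789221u);
}

// Maps arbitrary 32 bits to a float in (0, 1]
float bitsToUnitFloat(std::uint32_t n)
{
    // Keep 23 bits as the mantissa and force the exponent bits of 1.0,
    // so the bit pattern represents a float in [1, 2)
    n = (n >> 9u) | 0x3F800000u;

    float value;
    static_assert(sizeof(value) == sizeof(n), "this sketch assumes 32-bit floats");
    std::memcpy(&value, &n, sizeof(value)); // C++ counterpart of uintBitsToFloat

    const float result = 2.0f - value;
    assert(result > 0.0f && result <= 1.0f);
    return result;
}
```

Because the float is assembled directly from random bits, no division or modulo is needed. All bits zero give 1.0, and the more mantissa bits are set, the closer the result gets to zero.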

Here is a link to the full shader - random.glsl

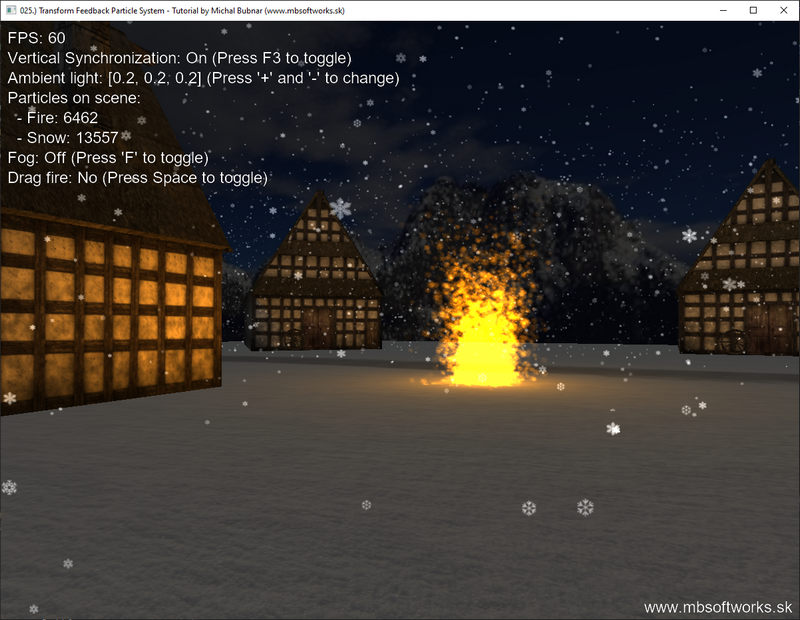

This is what has been achieved in this tutorial:

Whoa! Now that was literally the longest article I've ever written! The problem is that particle systems are a pretty complex topic and they can't be explained in a bunch of sentences. I really hope that you enjoyed this article and learned new techniques. You can build upon this and make a particle system with multiple generators, generating more particles and similar stuff. Creativity has no bounds.

Download 6.33 MB (819 downloads)
