Hello my fellow readers and welcome to the 30th tutorial of my OpenGL 4 series! This one took me a really long time to write, as I have just realized that once you have a family, your free time diminishes to practically zero! Especially these days, now that my little son has started to walk, you just have to watch him, because everything happens to be dangerous now. But anyway, piece by piece I have written this article, so let's get into the basics of animation. This time it's keyframe animation with the MD2 model file format.

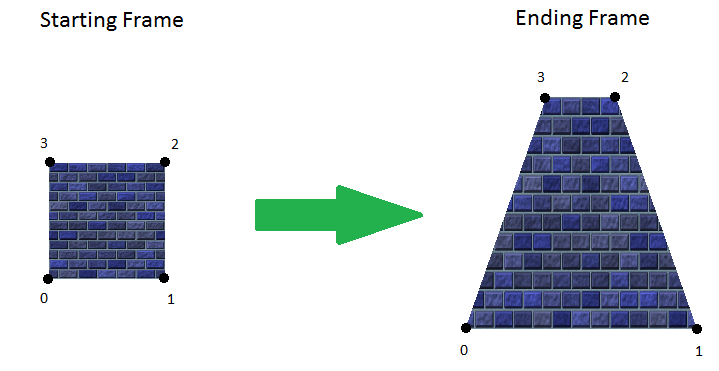

Keyframe animation is one of the easiest animation methods and also one of the first methods developed to animate 3D models. The idea is to have a starting and an ending frame and then smoothly change from the starting to the ending frame over some time. And if you combine several keyframes, you get an animation! If you think it through and combine the frames in a certain way, you can even create an animation that can be looped (e.g. a walking animation)!

Let's take a very simple example: if a model should get from the starting to the ending frame in 2 seconds, then the intermediate model, calculated dynamically, looks like this:

As you can see, we are able to calculate every single frame between the starting and the ending frame just by knowing the total animation running time (2 seconds in this case) and the time passed since the start of the animation. Every value in between has been calculated dynamically using LINEAR INTERPOLATION. There are actually other types of interpolation (for example spherical), but this time we use the simplest one.
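To make the 2-second example concrete, here is a minimal C++ sketch. The function names factorFromTime and lerpScalar are hypothetical (they are not from the tutorial's code): the factor is simply the elapsed time divided by the total running time, clamped to [0, 1], so 0.5 s gives t = 0.25, 1 s gives t = 0.5 and 2 s gives the ending frame (t = 1).

```cpp
#include <algorithm>
#include <cassert>

// Interpolation factor t in [0, 1] for a single keyframe pair, assuming the
// animation starts at time 0 and reaches the ending frame after totalTime seconds.
float factorFromTime(float elapsedTime, float totalTime)
{
    assert(totalTime > 0.0f);
    // Clamp so that we stay on the ending frame once the animation is over
    return std::clamp(elapsedTime / totalTime, 0.0f, 1.0f);
}

// Linear interpolation of one scalar component (e.g. the x coordinate of a vertex)
float lerpScalar(float v0, float v1, float t)
{
    return v0 + (v1 - v0) * t;
}
```

For instance, lerpScalar(10.0f, 20.0f, factorFromTime(0.5f, 2.0f)) gives 12.5f, a quarter of the way from 10 to 20.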

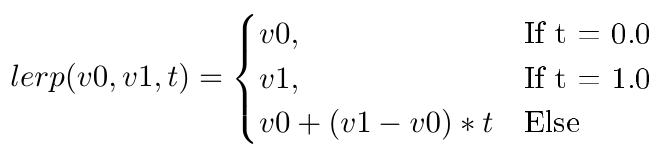

Given 2 endpoint values v0 and v1 (in our case two vertices) and a parameter t in the range [0, 1], linear interpolation gives us an arbitrary point on the line segment defined by these two endpoints, controlled by the parameter t:

$$v(t) = v_0 + (v_1 - v_0)\,t = (1 - t)\,v_0 + t\,v_1$$

So basically, what is happening here is that we go from one point to another along a straight line, hence the name LINEar interpolation. How do we apply it to animation then? We do this for every single vertex in the model! For every single vertex in the starting frame, we also have one in the ending frame, and thus we are able to do linear interpolation between the two frames! This doesn't apply only to vertex positions: this way, we can also animate texture coordinates or even normals (the vertex shader in this tutorial interpolates normals as well).
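Applied to a whole frame, the idea could look like the following CPU-side sketch. It is only an illustration: the tutorial does this work on the GPU in the vertex shader, and the Vec3 type and interpolateFrames function are hypothetical stand-ins (the real code uses glm::vec3). The only requirement is that both frames store the same number of vertices in the same order, which is exactly what MD2 frames give us.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal vertex type; the tutorial itself uses glm::vec3
struct Vec3
{
    float x, y, z;
};

// Linearly interpolates every vertex of frame A towards the matching vertex of frame B.
// Both frames must contain the same number of vertices in the same order.
std::vector<Vec3> interpolateFrames(const std::vector<Vec3>& frameA,
                                    const std::vector<Vec3>& frameB,
                                    float t)
{
    assert(frameA.size() == frameB.size());
    std::vector<Vec3> result(frameA.size());
    for (std::size_t i = 0; i < frameA.size(); i++)
    {
        result[i].x = frameA[i].x + (frameB[i].x - frameA[i].x) * t;
        result[i].y = frameA[i].y + (frameB[i].y - frameA[i].y) * t;
        result[i].z = frameA[i].z + (frameB[i].z - frameA[i].z) * t;
    }
    return result;
}
```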

However, having only two keyframes for an animation would really not be very nice. Imagine an animation where you rotate something. For example, if we could rotate our heads by 180 degrees (we can't, but imagine we can), an animation with two keyframes would look like this: the face would get sucked into the head itself and then reappear on the other side of the head. That's because linear interpolation moves every vertex along a straight line, right through the middle of the head, instead of along an arc. So when making a keyframe animation, two keyframes are usually not enough. But if we approximate the animation with 20 or even more frames and then interpolate between consecutive keyframes one by one, the animation will look smoother and better!
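Playing a sequence of keyframes just means picking the right consecutive pair for the current time and computing a local t for that pair. Below is a stateless sketch of that idea, assuming every pair of keyframes takes the same time (1 / fps) and that a looping animation blends its last frame back into the first one. The names are hypothetical; the tutorial itself advances frames incrementally in updateAnimation instead.

```cpp
#include <cassert>
#include <cmath>

// Which two keyframes to blend and how far we are between them
struct KeyframeSample
{
    int currentFrame;
    int nextFrame;
    float t; // interpolation factor in [0, 1)
};

// Samples a sequence of frameCount keyframes played at fps frames per second.
// Assumption: every keyframe pair takes 1 / fps seconds; when looping,
// the last frame blends back into the first one.
KeyframeSample sampleKeyframes(float time, int frameCount, float fps, bool loop)
{
    assert(frameCount > 0 && fps > 0.0f && time >= 0.0f);
    const float framePosition = time * fps; // e.g. 2.5 = halfway between frames 2 and 3
    int current = static_cast<int>(std::floor(framePosition));
    const float t = framePosition - std::floor(framePosition);
    if (loop)
    {
        current %= frameCount;
        return {current, (current + 1) % frameCount, t};
    }
    if (current >= frameCount - 1)
    {
        // A non-looping animation is over: hold the last frame
        return {frameCount - 1, frameCount - 1, 0.0f};
    }
    return {current, current + 1, t};
}
```

For example, with 4 frames at 10 fps, time 0.25 s lands halfway between frames 2 and 3, while time 0.375 s blends frame 3 back into frame 0 when looping.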

The MD2 file doesn't actually store the animations; instead, it stores only the (key)frames. However, every frame usually has a name, and that name is very often in a format like run001, run002, run003 etc., implying that those frames belong to the run animation. From my research, though, it turns out that all the metadata regarding animations (e.g. transition times) is not part of the file; it is provided from elsewhere. For instance, it seems that the models used in Quake 2 all had the same (standardized) animations, for example walk, run, crouch etc. They all had the same number of frames in the same order in the MD2 file. The transition times were then defined in the C code, outside of the model file (which stored frames only, with no metadata about animations whatsoever). We'll get to that later.
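Since the frame names are the only hint the file gives us, one way to guess an animation list from them is to strip the trailing digits and group consecutive frames with the same prefix. This is a hypothetical sketch of such parsing; it is not necessarily how the tutorial's loader builds its automatically parsed list.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical animation description derived only from frame names
struct ParsedAnimation
{
    std::string name;
    int startFrame;
    int endFrame;
};

// Strips the trailing digits of a frame name, e.g. "run003" -> "run"
std::string animationNameFromFrame(const std::string& frameName)
{
    std::size_t end = frameName.size();
    while (end > 0 && std::isdigit(static_cast<unsigned char>(frameName[end - 1])))
    {
        end--;
    }
    return frameName.substr(0, end);
}

// Groups consecutive frames sharing the same name prefix into one animation
std::vector<ParsedAnimation> groupFramesByName(const std::vector<std::string>& frameNames)
{
    std::vector<ParsedAnimation> animations;
    for (std::size_t i = 0; i < frameNames.size(); i++)
    {
        const std::string name = animationNameFromFrame(frameNames[i]);
        const int frameIndex = static_cast<int>(i);
        if (!animations.empty() && animations.back().name == name)
        {
            animations.back().endFrame = frameIndex; // extend the running animation
        }
        else
        {
            animations.push_back({name, frameIndex, frameIndex}); // start a new one
        }
    }
    return animations;
}
```

With the names stand01, stand02, run001, run002, run003 this yields "stand" (frames 0 to 1) and "run" (frames 2 to 4). Keep in mind that naming is only a convention: names that encode a variant number, such as a hypothetical pain101 and pain201, would collapse into one "pain" animation.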

So let's take a very quick walk through the MD2 file format before we get into loading it.

The MD2 file format is a little bit complex, and explaining every single structure would be very exhausting, especially when there is already an article explaining it in great detail. It's the MD2 specs article, and lots of structures used in my tutorial are taken from or inspired by it. So before reading further, try to read through his article first; armed with that knowledge, you will find it easier to understand loading and animating MD2 files in this tutorial. On this link, you can find the whole MD2 model class:

Megabyte Softworks MD2 Model Class

As you can see, there are several sub-structures there. I will try to give a brief overview of them here:
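The first of those structures is the fixed-size header at the very beginning of the file. Below is a sketch of it, following the field layout of the publicly documented MD2 format (17 little-endian 32-bit integers, 68 bytes in total). The struct, its field names and readMD2Header are illustrative and may differ from the names used in the tutorial's class, which for instance accesses header_.numFrames.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Layout of the 68-byte MD2 header (field names are illustrative)
struct MD2Header
{
    std::int32_t ident;            // magic number "IDP2"
    std::int32_t version;          // must be 8
    std::int32_t skinWidth;        // texture width in pixels
    std::int32_t skinHeight;       // texture height in pixels
    std::int32_t frameSize;        // size of one frame in bytes
    std::int32_t numSkins;
    std::int32_t numVertices;      // vertices per frame
    std::int32_t numTexCoords;
    std::int32_t numTriangles;
    std::int32_t numGlCommands;    // size of the GL command list in 32-bit words
    std::int32_t numFrames;
    std::int32_t offsetSkins;      // byte offsets from the start of the file
    std::int32_t offsetTexCoords;
    std::int32_t offsetTriangles;
    std::int32_t offsetFrames;
    std::int32_t offsetGlCommands;
    std::int32_t offsetEnd;
};

static_assert(sizeof(MD2Header) == 68, "MD2 header must be 17 x 32-bit integers");

// Copies the header out of raw file bytes and checks the magic number and version.
// Assumes a little-endian host, matching how MD2 files store their integers.
bool readMD2Header(const unsigned char* data, std::size_t size, MD2Header& header)
{
    assert(data != nullptr);
    if (size < sizeof(MD2Header))
    {
        return false;
    }
    std::memcpy(&header, data, sizeof(MD2Header));
    return std::memcmp(&header.ident, "IDP2", 4) == 0 && header.version == 8;
}
```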

Before we can animate anything, we have to load the model. This is done in the loadModel() function. I won't go into the nitty-gritty details here, but I will try to give you a big-picture overview of how the loading is done.
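One loading detail worth showing is how MD2 compresses vertex positions: every frame stores a scale and a translate vector, and every vertex is packed into one byte per axis plus a normal index, so a position is recovered as scale * packed + translate. This is a minimal sketch following the public MD2 format description; the struct and function names are hypothetical and this is not the tutorial's loadModel() code.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Compressed MD2 vertex: one byte per axis plus an index into
// the table of 162 precomputed normals (the normal is not used here)
struct MD2PackedVertex
{
    std::uint8_t v[3];
    std::uint8_t normalIndex;
};

// Per-frame decompression parameters stored in the frame header
struct MD2FrameTransform
{
    float scale[3];
    float translate[3];
};

// Decompresses a vertex position: scale * packed + translate, per axis
std::array<float, 3> decompressVertex(const MD2PackedVertex& packed, const MD2FrameTransform& frame)
{
    assert(packed.normalIndex < 162); // sanity check of the normal table index
    std::array<float, 3> position{};
    for (int axis = 0; axis < 3; axis++)
    {
        position[axis] = frame.scale[axis] * static_cast<float>(packed.v[axis]) + frame.translate[axis];
    }
    return position;
}
```

Quake 2 used Z as the up axis, so depending on your own conventions you may also want to swap coordinates after decompression.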

Now that we have the model loaded into our structures, let's discuss how the animation is done. The main idea is to perform the animation on the GPU (in the shaders) instead of on the client side (CPU). The problem with animation is that every intermediate frame has to be calculated on the fly based on the animation state, and that's a costly process. I do it in the vertex shader:

```glsl
#version 440 core

uniform struct
{
    mat4 projectionMatrix;
    mat4 viewMatrix;
    mat4 modelMatrix;
    mat3 normalMatrix;
} matrices;

layout (location = 0) in vec3 vertexPosition;
layout (location = 1) in vec2 vertexTexCoord;
layout (location = 2) in vec3 vertexNormal;
layout (location = 3) in vec3 nextVertexPosition;
layout (location = 4) in vec3 nextVertexNormal;

smooth out vec2 ioVertexTexCoord;
smooth out vec3 ioVertexNormal;
smooth out vec4 ioWorldPosition;
smooth out vec4 ioEyeSpacePosition;

uniform float interpolationFactor;

void main()
{
    mat4 mvMatrix = matrices.viewMatrix * matrices.modelMatrix;
    mat4 mvpMatrix = matrices.projectionMatrix * mvMatrix;

    // Linear interpolation between the current and the next frame
    vec4 interpolatedPosition = vec4(vertexPosition + (nextVertexPosition - vertexPosition)*interpolationFactor, 1.0);
    vec3 interpolatedNormal = vertexNormal + (nextVertexNormal - vertexNormal)*interpolationFactor;

    gl_Position = mvpMatrix*interpolatedPosition;
    ioVertexTexCoord = vertexTexCoord;
    ioEyeSpacePosition = mvMatrix * interpolatedPosition;
    ioVertexNormal = matrices.normalMatrix * interpolatedNormal;
    ioWorldPosition = matrices.modelMatrix * interpolatedPosition;
}
```

The main idea is to provide the vertices of the current and the next frame together with the interpolation factor, which is calculated on and passed from the client. Once we do this, the actual interpolated vertices and normals are pretty easy to calculate. This shader can then be combined with an arbitrary fragment shader, because it only alters the vertex positions and normals, not the color of the pixels! By the way, this is one of the few cases in my tutorials where we do a bit more in the vertex shader than just passing down the vertex position and a normal.

The last very important thing to discuss is how to update the animation and calculate the interpolation factor. This is done in the updateAnimation method:

```cpp
void MD2Model::AnimationState::updateAnimation(const float deltaTime)
{
    if (startFrame == endFrame) {
        return;
    }

    totalRunningTime += deltaTime;
    const float oneFrameDuration = 1.0f / static_cast<float>(fps);
    while (totalRunningTime - nextFrameTime > oneFrameDuration)
    {
        nextFrameTime += oneFrameDuration;
        currentFrameIndex = nextFrameIndex;
        nextFrameIndex++;
        if (nextFrameIndex > endFrame) {
            nextFrameIndex = loop ? startFrame : endFrame;
        }
    }

    interpolationFactor = static_cast<float>(fps) * (totalRunningTime - nextFrameTime);
}
```

First of all, there is just a validity check: if startFrame and endFrame are the same, there is nothing to animate (we can't interpolate between a frame and itself). After this, we add the delta time since the last render to the total animation running time. If the total running time has passed the point where the next frame should be reached, we update the frame counters, so that the vertex shader gets the correct current and next frame vertices. Because this is a while loop, several frames can be skipped at once if the delta time was long.

The last and most important thing is to calculate the interpolation factor, but this is an easy one-liner. Thanks to the while loop, the maximal difference between totalRunningTime and nextFrameTime is 1.0f / fps, i.e. oneFrameDuration. We just need to stretch this value back to the range 0...1, so we simply multiply it by fps and we're done.
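If you want to check the frame stepping without OpenGL, here is a standalone replica of the logic above, wrapped in a hypothetical AnimationStateSketch struct. The member names mirror the ones used in updateAnimation, but the struct itself and its initial values are my assumptions. With fps = 10, a single 0.25 s update passes frames 1 and 2, ends up blending from frame 2 to frame 3, and the interpolation factor comes out at about 0.5.

```cpp
#include <cassert>

// Hypothetical stand-in for the tutorial's AnimationState, testable without OpenGL
struct AnimationStateSketch
{
    int startFrame = 0;
    int endFrame = 0;
    int fps = 10;
    bool loop = true;
    int currentFrameIndex = 0;
    int nextFrameIndex = 1;
    float totalRunningTime = 0.0f;
    float nextFrameTime = 0.0f; // time at which currentFrameIndex was reached
    float interpolationFactor = 0.0f;

    void updateAnimation(const float deltaTime)
    {
        if (startFrame == endFrame)
        {
            return; // nothing to interpolate
        }
        assert(fps > 0); // avoid division by zero
        totalRunningTime += deltaTime;
        const float oneFrameDuration = 1.0f / static_cast<float>(fps);
        // Advance as many frames as the accumulated time allows
        while (totalRunningTime - nextFrameTime > oneFrameDuration)
        {
            nextFrameTime += oneFrameDuration;
            currentFrameIndex = nextFrameIndex;
            nextFrameIndex++;
            if (nextFrameIndex > endFrame)
            {
                nextFrameIndex = loop ? startFrame : endFrame;
            }
        }
        // Elapsed part of the current frame, stretched from [0, 1/fps] to [0, 1]
        interpolationFactor = static_cast<float>(fps) * (totalRunningTime - nextFrameTime);
    }
};
```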

Finally, we should discuss how to render the model, especially the animated model. To do so, we have to properly set up the vertices and normals based on the animation state. Here is the setupVAO method that does this based on the current and next frame:

```cpp
void MD2Model::setupVAO(size_t currentFrame, size_t nextFrame)
{
    if (currentFrame >= static_cast<size_t>(header_.numFrames) || nextFrame >= static_cast<size_t>(header_.numFrames)) {
        return;
    }

    glBindVertexArray(vao_);
    const auto currentFrameByteOffset = currentFrame * verticesPerFrame_ * sizeof(glm::vec3);
    const auto nextFrameByteOffset = nextFrame < static_cast<size_t>(header_.numFrames) ? nextFrame * verticesPerFrame_ * sizeof(glm::vec3) : currentFrameByteOffset;

    // Setup pointers to vertices for current and next frame to perform interpolation
    vboFrameVertices_.bindVBO();
    glEnableVertexAttribArray(POSITION_ATTRIBUTE_INDEX);
    glVertexAttribPointer(POSITION_ATTRIBUTE_INDEX, 3, GL_FLOAT, GL_FALSE, sizeof(glm::vec3), reinterpret_cast<const void*>(currentFrameByteOffset));
    glEnableVertexAttribArray(NEXT_POSITION_ATTRIBUTE_INDEX);
    glVertexAttribPointer(NEXT_POSITION_ATTRIBUTE_INDEX, 3, GL_FLOAT, GL_FALSE, sizeof(glm::vec3), reinterpret_cast<const void*>(nextFrameByteOffset));

    // Setup pointers to texture coordinates (shared by all frames)
    vboTextureCoordinates_.bindVBO();
    glEnableVertexAttribArray(TEXTURE_COORDINATE_ATTRIBUTE_INDEX);
    glVertexAttribPointer(TEXTURE_COORDINATE_ATTRIBUTE_INDEX, 2, GL_FLOAT, GL_FALSE, sizeof(glm::vec2), nullptr);

    // Setup pointers to normals for current and next frame to perform interpolation
    vboNormals_.bindVBO();
    glEnableVertexAttribArray(NORMAL_ATTRIBUTE_INDEX);
    glVertexAttribPointer(NORMAL_ATTRIBUTE_INDEX, 3, GL_FLOAT, GL_FALSE, sizeof(glm::vec3), reinterpret_cast<const void*>(currentFrameByteOffset));
    glEnableVertexAttribArray(NEXT_NORMAL_ATTRIBUTE_INDEX);
    glVertexAttribPointer(NEXT_NORMAL_ATTRIBUTE_INDEX, 3, GL_FLOAT, GL_FALSE, sizeof(glm::vec3), reinterpret_cast<const void*>(nextFrameByteOffset));
}
```

It sets up the vertices of the current and next frame, but only if both frame indices refer to existing frames (just some validity checks again). If the model is rendered statically (no animation), we can pass in the same frame index twice (e.g. 0, 0), which results in frame 0 being rendered:

```cpp
void MD2Model::renderModelStatic()
{
    if (!isLoaded())
    {
        std::cout << "MD2 model has not been loaded, cannot render it!" << std::endl;
        return;
    }

    skinTexture_.bind();
    setupVAO(0, 0);
    // interpolationFactor is a float uniform, so pass a float literal
    ShaderProgramManager::getInstance().getShaderProgram("md2")[ShaderConstants::interpolationFactor()] = 0.0f;

    GLint totalOffset = 0;
    for (size_t i = 0; i < renderModes.size(); i++) // Just render using previously extracted render modes
    {
        glDrawArrays(renderModes[i], totalOffset, numRenderVertices[i]);
        totalOffset += numRenderVertices[i];
    }
}
```

And when the model is rendered animated, we pass the frame indices from the AnimationState class:

```cpp
void MD2Model::renderModelAnimated(const AnimationState& animationState)
{
    if (!isLoaded())
    {
        std::cout << "MD2 model has not been loaded, cannot render it!" << std::endl;
        return;
    }

    auto& shaderProgram = ShaderProgramManager::getInstance().getShaderProgram("md2");
    skinTexture_.bind();

    // Setup vertex attributes for current and next frame
    setupVAO(animationState.currentFrame, animationState.nextFrame);
    shaderProgram[ShaderConstants::interpolationFactor()] = animationState.interpolationFactor;

    GLint totalOffset = 0;
    for (size_t i = 0; i < renderModes.size(); i++)
    {
        glDrawArrays(renderModes[i], totalOffset, numRenderVertices[i]);
        totalOffset += numRenderVertices[i];
    }
}
```

As I have mentioned earlier, there is no universal way of determining the animations in the file, because the MD2 file only contains frames. However, many models that you can find on the Internet follow the Quake 2 conventions and have the following animation list, regardless of frame names:

```cpp
void MD2Model::useQuake2AnimationList()
{
    animations_.clear();
    animationNamesCached_.clear();

    // Name, start frame, end frame, frames per second
    addNewAnimation("Stand", 0, 39, 9);
    addNewAnimation("Run", 40, 45, 10);
    addNewAnimation("Attack", 46, 53, 10);
    addNewAnimation("Pain A", 54, 57, 7);
    addNewAnimation("Pain B", 58, 61, 7);
    addNewAnimation("Pain C", 62, 65, 7);
    addNewAnimation("Jump", 66, 71, 7);
    addNewAnimation("Flip", 72, 83, 7);
    addNewAnimation("Salute", 84, 94, 7);
    addNewAnimation("Fall Back", 95, 111, 10);
    addNewAnimation("Wave", 112, 122, 7);
    addNewAnimation("Pointing", 123, 134, 6);
    addNewAnimation("Crouch Stand", 135, 153, 10);
    addNewAnimation("Crouch Walk", 154, 159, 7);
    addNewAnimation("Crouch Attack", 160, 168, 10);
    addNewAnimation("Crouch Pain", 169, 172, 7);
    addNewAnimation("Crouch Death", 173, 177, 5);
    addNewAnimation("Death Fall Back", 178, 183, 7);
    addNewAnimation("Death Fall Forward", 184, 189, 7);
    addNewAnimation("Death Fall Back Slow", 190, 197, 7);
}
```

So if you know that the model uses the animation list above, you can simply overwrite the automatically parsed animation list with it.
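To put the numbers from the list into perspective, here is a small sketch that computes how long one loop of an animation takes. It assumes, as updateAnimation does when looping, that every transition between two consecutive frames takes 1 / fps seconds and that a looping animation also blends from its last frame back to its first one. The AnimationInfo struct and the helper functions are hypothetical and not part of the tutorial's MD2Model class. With these assumptions, "Run" (frames 40 to 45 at 10 fps) has 6 frames and loops every 0.6 s, while "Stand" (40 frames at 9 fps) takes roughly 4.4 s.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical description of one entry of the Quake 2 animation list
struct AnimationInfo
{
    std::string name;
    int startFrame;
    int endFrame;
    int fps;
};

// Number of keyframes the animation consists of (both ends inclusive)
int frameCount(const AnimationInfo& animation)
{
    return animation.endFrame - animation.startFrame + 1;
}

// Duration of one full loop: frameCount transitions of 1 / fps seconds each,
// including the blend from endFrame back to startFrame
float loopDuration(const AnimationInfo& animation)
{
    assert(animation.fps > 0);
    return static_cast<float>(frameCount(animation)) / static_cast<float>(animation.fps);
}

// Finds an animation by name; returns nullptr if there is no such animation
const AnimationInfo* findAnimation(const std::vector<AnimationInfo>& animations, const std::string& name)
{
    for (const auto& animation : animations)
    {
        if (animation.name == name)
        {
            return &animation;
        }
    }
    return nullptr;
}
```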

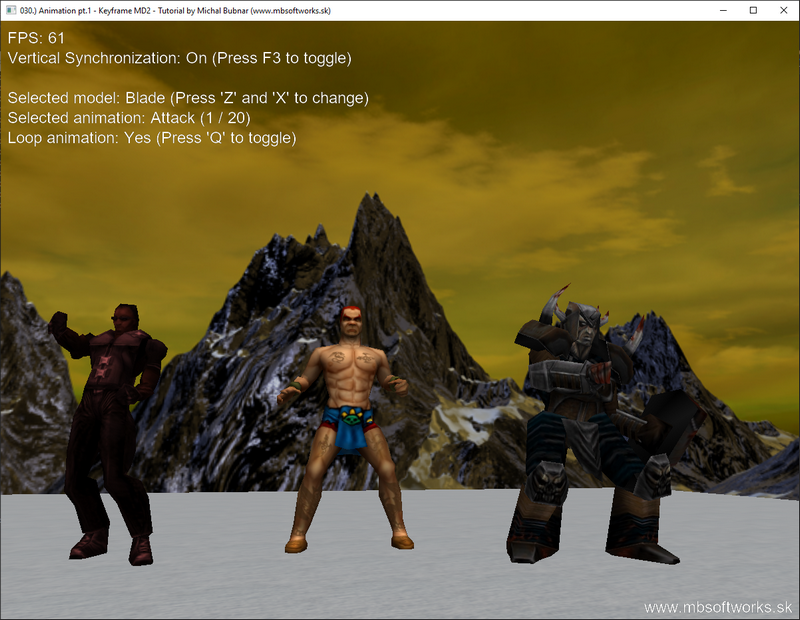

Here you can see what has been achieved:

I have to admit that after 5 months of writing a thing or two every week, I am really glad that this article is finished. With a child, I just don't find that much time anymore, and that's why I still did not explain everything in depth. However, I really try to write code in a self-explanatory manner, so that method and variable names can really help you understand it. Also, animation is really not a topic for a few lines of text, so some self-study and experimenting around is more than recommended.

If you think that the MD2 file format is somehow strange and cryptic, you are not alone! But that's only because it was designed in the 90s, which is a really long time ago. Back then, computers didn't have that many resources and you really couldn't afford to waste a megabyte of disk space, let alone a few hundred of them! Programmers simply had to squeeze as much as possible out of the little they had been given. Today we can afford to waste disk space and RAM, and even simple software that does practically nothing can eat hundreds of megabytes of RAM, but it wasn't always like that.

Here are a few links where you can still find MD2 resources, especially models and some other texts:

Download 6.36 MB (449 downloads)
